Benchmarking: retrieving and comparing against reference results

You can access the latest reference results for classification, clustering and regression directly from aeon. The results are stored on timeseriesclassification.com. This notebook shows how to recover the latest results. Because of software changes, these may differ slightly from published results. To recover the results published in one of our bake offs, see the notebook Loading bake off results. We update the results as we generate them. To see which estimators currently have results, check the Latest results page or call the function below.

[18]:

```python
from aeon.benchmarking import get_available_estimators

cls = get_available_estimators(task="classification")
print(len(cls), " classifier results available\n", cls)
```

40 classifier results available

classification

0 1NN-DTW

1 Arsenal

2 BOSS

3 CIF

4 CNN

5 Catch22

6 DrCIF

7 EE

8 FreshPRINCE

9 GRAIL

10 H-InceptionTime

11 HC1

12 HC2

13 Hydra

14 InceptionTime

15 LiteTime

16 MR

17 MR-Hydra

18 MiniROCKET

19 MrSQM

20 PF

21 QUANT

22 R-STSF

23 RDST

24 RISE

25 RIST

26 ROCKET

27 RSF

28 ResNet

29 STC

30 STSF

31 ShapeDTW

32 Signatures

33 TDE

34 TS-CHIEF

35 TSF

36 TSFresh

37 WEASEL-1.0

38 WEASEL-2.0

39 cBOSS

[19]:

```python
reg = get_available_estimators(task="regression")
print(len(reg), " regressor results available\n", reg)
```

19 regressor results available

regression

0 1NN-DTW

1 1NN-ED

2 5NN-DTW

3 5NN-ED

4 CNN

5 DrCIF

6 FCN

7 FPCR

8 FPCR-b-spline

9 FreshPRINCE

10 GridSVR

11 InceptionTime

12 RandF

13 ResNet

14 Ridge

15 ROCKET

16 RotF

17 SingleInceptionTime

18 XGBoost

[20]:

```python
clst = get_available_estimators(task="clustering", return_dataframe=False)
print(len(clst), " clustering results available\n", clst)
```

21 clustering results available

['dtw-dba', 'kmeans-ddtw', 'kmeans-dtw', 'kmeans-ed', 'kmeans-edr', 'kmeans-erp', 'kmeans-lcss', 'kmeans-msm', 'kmeans-twe', 'kmeans-wddtw', 'kmeans-wdtw', 'kmedoids-ddtw', 'kmedoids-dtw', 'kmedoids-ed', 'kmedoids-edr', 'kmedoids-erp', 'kmedoids-lcss', 'kmedoids-msm', 'kmedoids-twe', 'kmedoids-wddtw', 'kmedoids-wdtw']

Classification example

We will use the classification task as an example and recover the results for four classifiers. FreshPRINCE [4] is a pipeline of a TSFresh transform followed by a rotation forest classifier. InceptionTimeClassifier [5] is a deep learning ensemble. HIVECOTEV2 [6] is a meta ensemble of four different ensembles built on different representations. WEASEL2 [7] overhauls the original WEASEL using dilation and an ensemble of randomized hyper-parameter settings.

See [1] for an overview of recent advances in time series classification.

[21]:

```python
from aeon.benchmarking.results_loaders import (
    get_estimator_results,
    get_estimator_results_as_array,
)
from aeon.visualisation import (
    plot_boxplot_median,
    plot_critical_difference,
    plot_pairwise_scatter,
)

classifiers = [
    "FreshPRINCEClassifier",
    "HIVECOTEV2",
    "InceptionTimeClassifier",
    "WEASEL-Dilation",
]
datasets = ["ACSF1", "ArrowHead", "GunPoint", "ItalyPowerDemand"]

# Get results. To read locally, set the path variable.
# If you do not set path, results are loaded from
# https://timeseriesclassification.com/results/ReferenceResults.
# You can download the files directly from there.
default_split_all, data_names = get_estimator_results_as_array(estimators=classifiers)
print(
    " Returns an array with each column an estimator, shape (data_names, classifiers)"
)
print(
    f"By default recovers the default test split results for {len(data_names)} "
    f"equal length UCR datasets."
)

default_split_some, names = get_estimator_results_as_array(
    estimators=classifiers, datasets=datasets
)
print(
    f"Or specify datasets for result recovery. For example, {len(names)} datasets. "
    f"HIVECOTEV2 accuracy {names[3]} = {default_split_some[3][1]}"
)
```

Returns an array with each column an estimator, shape (data_names, classifiers)

By default recovers the default test split results for 112 equal length UCR datasets.

Or specify datasets for result recovery. For example, 4 datasets. HIVECOTEV2 accuracy ItalyPowerDemand = 0.9698736637512148

If you have any questions about these results or the datasets, please raise an issue on the associated repo. You can also recover results as a dictionary, where each key is a classifier name and each value is a dictionary mapping problem names to results.

[22]:

```python
hash_table = get_estimator_results(estimators=classifiers)
print("Keys = ", hash_table.keys())
print(
    "Accuracy of HIVECOTEV2 on ItalyPowerDemand = ",
    hash_table["HIVECOTEV2"]["ItalyPowerDemand"],
)
```

Keys = dict_keys(['FreshPRINCEClassifier', 'HIVECOTEV2', 'InceptionTimeClassifier', 'WEASEL-Dilation'])

Accuracy of HIVECOTEV2 on ItalyPowerDemand = 0.9698736637512148
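The dictionary and the array returned by get_estimator_results_as_array hold the same numbers in different layouts. The sketch below is illustrative only and is not aeon's implementation: it arranges a {estimator: {dataset: score}} mapping into an array with one row per dataset and one column per estimator. The helper name dict_to_array and the toy scores are made up for this example.

```python
import numpy as np


def dict_to_array(results, estimators, datasets):
    """Arrange a {estimator: {dataset: score}} mapping into a 2D array.

    Rows follow ``datasets`` and columns follow ``estimators``.
    Missing entries are filled with NaN.
    """
    table = np.full((len(datasets), len(estimators)), np.nan)
    for j, est in enumerate(estimators):
        for i, name in enumerate(datasets):
            table[i, j] = results.get(est, {}).get(name, np.nan)
    return table


# Hypothetical nested results with made-up scores (not real reference results)
toy = {
    "A": {"d1": 0.9, "d2": 0.7},
    "B": {"d1": 0.8},
}
print(dict_to_array(toy, ["A", "B"], ["d1", "d2"]))
```

Filling missing entries with NaN makes gaps visible instead of silently dropping a dataset, which matters when estimators have results for different sets of problems.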

The results recovered so far have all been on the default train/test split. If the train and test data are merged and resampled, the results can be very different. To account for this, we average results over 30 resamples. You can recover these averages by setting the default_only parameter to False.

[23]:

```python
resamples_all, data_names = get_estimator_results_as_array(
    estimators=classifiers, default_only=False
)
print("Results are averaged over 30 stratified resamples.")
print(
    f" HIVECOTEV2 default train test partition of {data_names[3]} = "
    f"{default_split_all[3][1]} and averaged over 30 resamples = "
    f"{resamples_all[3][1]}"
)
```

Results are averaged over 30 stratified resamples.

HIVECOTEV2 default train test partition of DiatomSizeReduction = 0.9705882352941176 and averaged over 30 resamples = 0.9290849673202614
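To make the resampling idea concrete, here is a minimal sketch of one plausible protocol: merge the default train and test partitions, draw 30 stratified resamples that keep the original train set size, and average the test accuracy. It uses scikit-learn's train_test_split and a 1-nearest-neighbour classifier on random toy data purely as stand-ins; the seeds and exact procedure used to produce the reference results on timeseriesclassification.com are assumptions here and may differ.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

# Random toy data standing in for one dataset's default train/test split.
rng = np.random.default_rng(0)
y_train = np.repeat([0, 1], 20)
y_test = np.repeat([0, 1], 30)
X_train = rng.normal(size=(len(y_train), 10)) + y_train[:, None]
X_test = rng.normal(size=(len(y_test), 10)) + y_test[:, None]

# Merge the default train and test partitions into a single pool.
X = np.concatenate([X_train, X_test])
y = np.concatenate([y_train, y_test])

accuracies = []
for seed in range(30):
    # Stratified resample that preserves the original train set size.
    X_tr, X_te, y_tr, y_te = train_test_split(
        X, y, train_size=len(y_train), stratify=y, random_state=seed
    )
    clf = KNeighborsClassifier(n_neighbors=1).fit(X_tr, y_tr)
    accuracies.append(clf.score(X_te, y_te))

print(f"Mean accuracy over {len(accuracies)} resamples: {np.mean(accuracies):.3f}")
```

Stratifying keeps the class proportions of each resample equal to those of the merged data, so differences between resamples come from which cases are drawn rather than from shifts in class balance.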

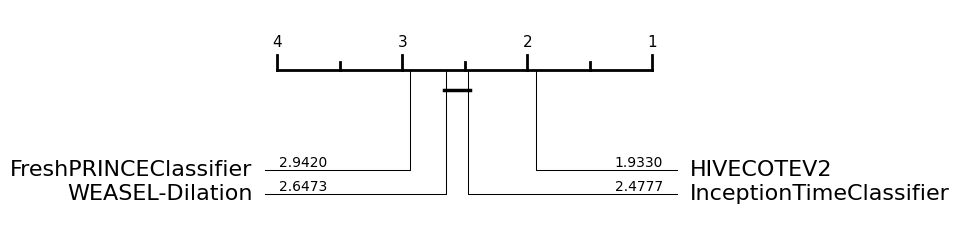

Once you have the results you want, you can compare classifiers with built-in aeon tools. For example, you can draw a critical difference diagram [10]. This displays the average rank of each estimator over all datasets, then groups estimators whose ranks are not significantly different into cliques, shown with a solid bar. In the example below with the default train/test splits, FreshPRINCEClassifier and WEASEL-Dilation are not significantly different in rank from InceptionTimeClassifier, but HIVECOTEV2 is significantly better. The diagram is produced using pairwise Wilcoxon signed-rank tests, and cliques are formed using the Holm correction for multiple testing, as described in [8, 9]. The significance level alpha is 0.05 (the default).
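The ranking and testing steps described above can be sketched with SciPy. This is an illustrative sketch, not aeon's implementation: the helper names average_ranks and holm_pairwise_wilcoxon are hypothetical, the accuracies are random toy data, and the sketch stops at the Holm-corrected pairwise decisions rather than forming cliques as plot_critical_difference does.

```python
from itertools import combinations

import numpy as np
from scipy.stats import rankdata, wilcoxon


def average_ranks(results):
    """Mean rank of each column (estimator); rank 1 = highest accuracy."""
    ranks = np.array([rankdata(-row) for row in results])
    return ranks.mean(axis=0)


def holm_pairwise_wilcoxon(results, alpha=0.05):
    """Pairwise Wilcoxon signed-rank tests with Holm's step-down correction.

    Returns a dict mapping (i, j) column pairs to (p-value, rejected).
    """
    pairs = list(combinations(range(results.shape[1]), 2))
    pvals = np.array(
        [wilcoxon(results[:, i], results[:, j]).pvalue for i, j in pairs]
    )
    m = len(pvals)
    reject = np.zeros(m, dtype=bool)
    # Holm: compare the k-th smallest p-value (k = 0, 1, ...) with
    # alpha / (m - k) and stop at the first one that is not rejected.
    for k, idx in enumerate(np.argsort(pvals)):
        if pvals[idx] <= alpha / (m - k):
            reject[idx] = True
        else:
            break
    return {pair: (p, r) for pair, p, r in zip(pairs, pvals, reject)}


# Toy accuracies (made up for illustration): 20 datasets x 3 estimators.
rng = np.random.default_rng(0)
base = rng.uniform(0.6, 0.8, size=20)
toy = np.column_stack(
    [
        base + 0.1 + rng.uniform(0.0, 0.01, size=20),  # best on every dataset
        base,
        base + rng.uniform(-0.01, 0.01, size=20),
    ]
)
print(average_ranks(toy))
for pair, (p, rejected) in holm_pairwise_wilcoxon(toy).items():
    print(pair, f"p={p:.2e}", "significant" if rejected else "not significant")
```

Holm's procedure controls the family-wise error rate across all pairwise comparisons while being less conservative than a plain Bonferroni correction.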

[24]:

```python
plot = plot_critical_difference(
    default_split_all, classifiers, test="wilcoxon", correction="holm"
)
```

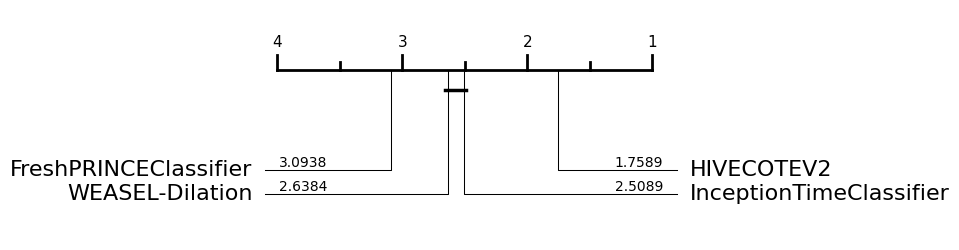

If we use the data averaged over resamples, we can detect differences more clearly. Now we see WEASEL-Dilation and InceptionTimeClassifier are significantly better than the FreshPRINCEClassifier.

[25]:

```python
plot = plot_critical_difference(
    resamples_all, classifiers, test="wilcoxon", correction="holm"
)
```

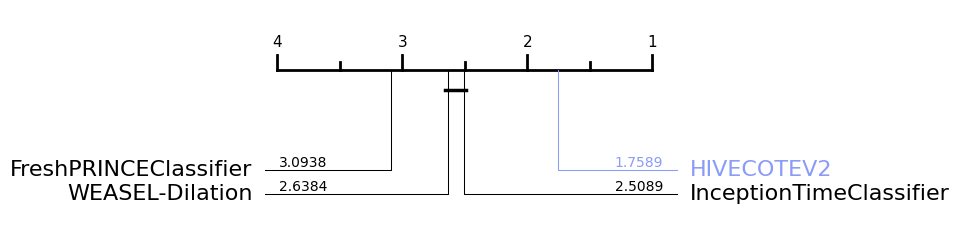

To highlight a specific classifier, use the highlight parameter: a dict mapping the name of each classifier to highlight to the colour to draw it in, such as highlight={"HIVECOTEV2": "#8a9bf8"}.

[26]:

```python
plot = plot_critical_difference(
    resamples_all,
    classifiers,
    test="wilcoxon",
    correction="holm",
    highlight={"HIVECOTEV2": "#8a9bf8"},
)
```

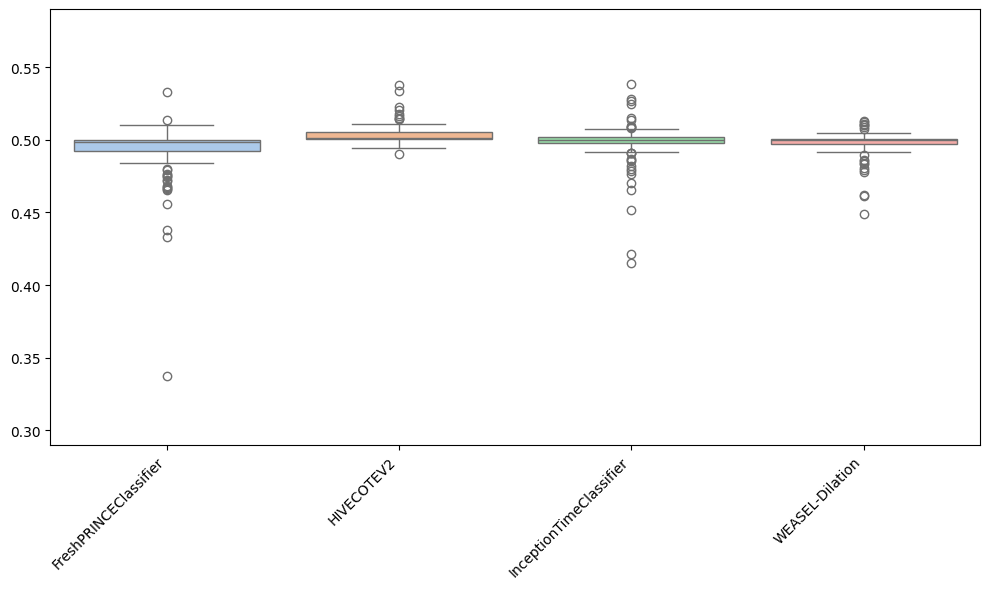

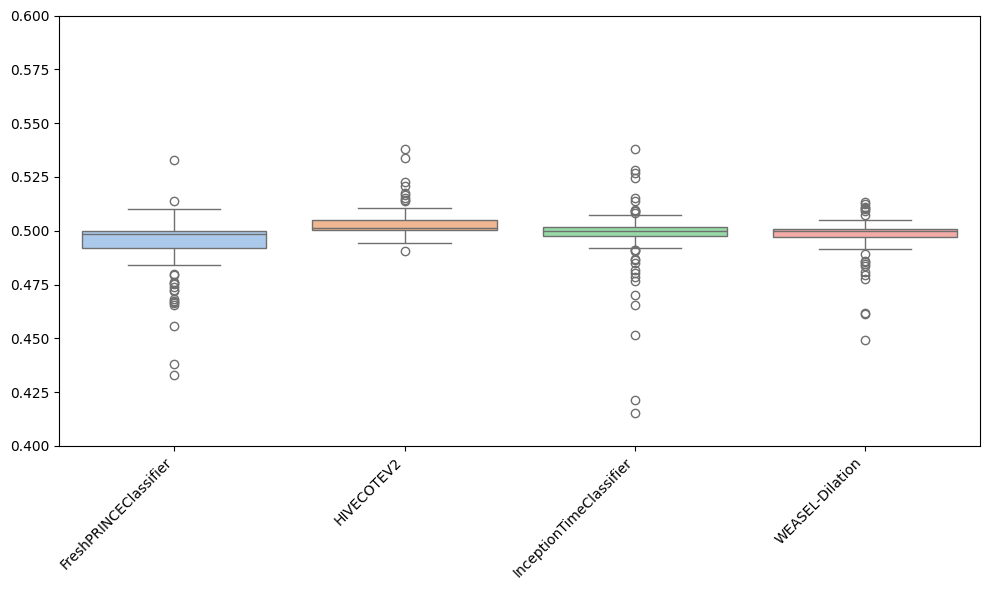

Besides critical difference diagrams, different versions of boxplots can be plotted. Boxplots show the locality, spread and skewness of the results. Here, plot_boxplot_median plots, for each estimator, the distribution of its results relative to the median result on each dataset. A value above 0.5 means the estimator is better than the median accuracy for that particular problem.
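A minimal sketch of the normalisation described above, assuming that each dataset's median across estimators is subtracted and 0.5 added, so the median sits at 0.5. The helper name deviation_from_median is hypothetical, the accuracies are toy values, and the exact transform inside plot_boxplot_median should be checked against the aeon source.

```python
import numpy as np


def deviation_from_median(results):
    """Shift each row (dataset) so its median across estimators is 0.5."""
    medians = np.median(results, axis=1, keepdims=True)
    return results - medians + 0.5


# Toy accuracies: 2 datasets (rows) x 3 estimators (columns)
toy = np.array(
    [
        [0.90, 0.80, 0.70],  # median 0.80
        [0.60, 0.65, 0.50],  # median 0.60
    ]
)
print(deviation_from_median(toy))
```

Working with deviations rather than raw accuracies removes differences in difficulty between datasets, so a boxplot of each column reflects how an estimator performs relative to its peers.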

[27]:

```python
plot = plot_boxplot_median(
    resamples_all,
    classifiers,
    plot_type="boxplot",
    outliers=True,
)
```

As can be observed, the results of FreshPRINCEClassifier are more spread out than those of the other classifiers. Furthermore, most results for HIVECOTEV2 (HC2) are above 0.5, which indicates that on most datasets HC2 is better than the median classifier.

There are more options to play with in this function. For example, y_min and y_max set the limits of the y-axis:

[28]:

```python
plot = plot_boxplot_median(
    resamples_all,
    classifiers,
    plot_type="boxplot",
    outliers=True,
    y_min=0.4,
    y_max=0.6,
)
```

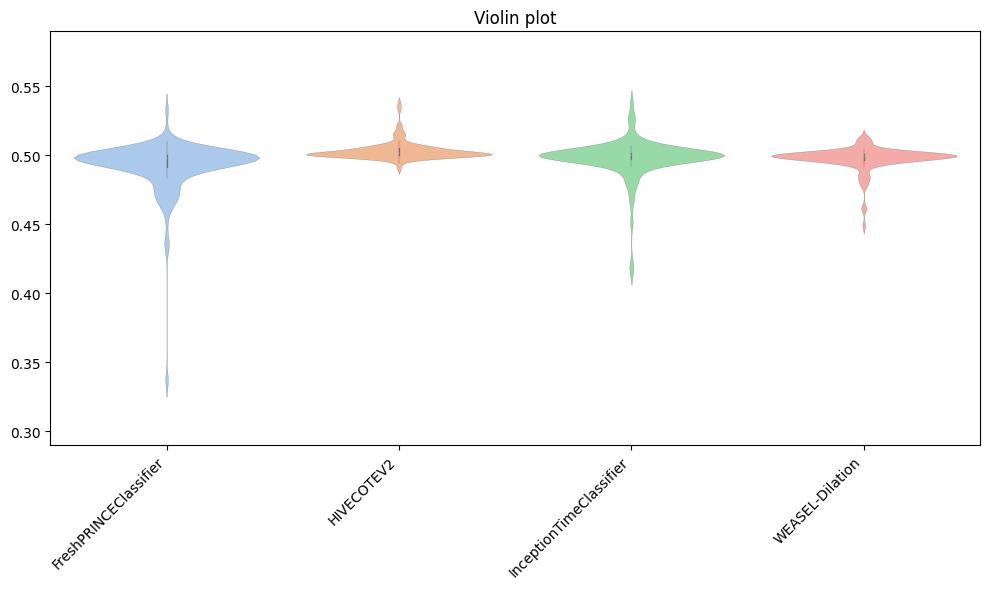

Apart from well-known boxplots, different versions can be plotted, depending on the purpose of the user:

- violin is a hybrid of a boxplot and a kernel density plot, showing peaks in the data.
- swarm is a scatterplot with points adjusted to be non-overlapping.
- strip is similar to swarm but uses jitter to reduce overplotting.

Below is an example of the violin plot, including a title.

[29]:

```python
plot = plot_boxplot_median(
    resamples_all,
    classifiers,
    plot_type="violin",
    title="Violin plot",
)
```

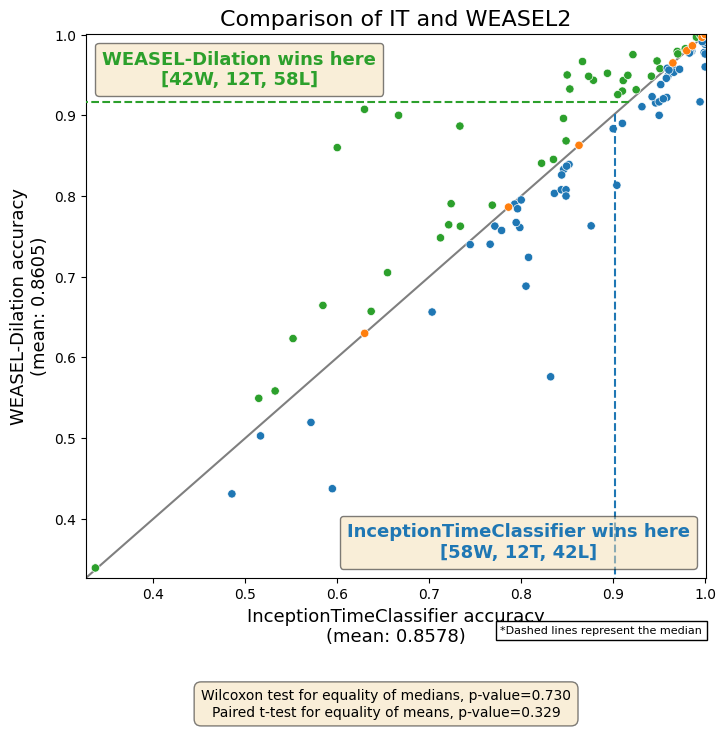

The critical difference diagrams above show that InceptionTimeClassifier is not significantly better than WEASEL-Dilation. To compare these two approaches directly, we can draw a scatter plot in which each point is the pair of accuracies the two approaches achieve on one dataset. The legend also reports the numbers of wins (W), ties (T) and losses (L) for each approach.
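The win/tie/loss counts in the legend can be illustrated with a small sketch. The helper wins_ties_losses is hypothetical and counts ties by exact equality; aeon's plot_pairwise_scatter may round accuracies or use a tolerance, so treat this as an assumption rather than its exact rule. The accuracies are toy values.

```python
import numpy as np


def wins_ties_losses(acc_a, acc_b):
    """Count datasets where method A beats, ties with, or loses to method B."""
    acc_a = np.asarray(acc_a)
    acc_b = np.asarray(acc_b)
    wins = int(np.sum(acc_a > acc_b))
    ties = int(np.sum(acc_a == acc_b))
    losses = int(np.sum(acc_a < acc_b))
    return wins, ties, losses


# Toy accuracies on five datasets (made up for illustration)
print(wins_ties_losses([0.9, 0.8, 0.7, 0.6, 0.5], [0.85, 0.8, 0.75, 0.55, 0.6]))
# → (2, 1, 2)
```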

[30]:

```python
import matplotlib.pyplot as plt

methods = ["InceptionTimeClassifier", "WEASEL-Dilation"]
results, data_names = get_estimator_results_as_array(estimators=methods)
# Transpose so that each row holds one method's accuracies over all datasets.
results = results.T
fig, ax = plot_pairwise_scatter(
    results[0],
    results[1],
    methods[0],
    methods[1],
    title="Comparison of IT and WEASEL2",
)
plt.show()
```


timeseriesclassification.com has results for classification, clustering and regression, and we update them as we generate new ones. To find out which estimators have results, call get_available_estimators.

References

[1] Middlehurst et al., “Bake off redux: a review and experimental evaluation of recent time series classification algorithms”, 2023, arXiv.

[2] Holder et al., “A Review and Evaluation of Elastic Distance Functions for Time Series Clustering”, 2023, arXiv, KAIS.

[3] Guijo-Rubio et al., “Unsupervised Feature Based Algorithms for Time Series Extrinsic Regression”, 2023, arXiv.

[4] Middlehurst and Bagnall, “The FreshPRINCE: A Simple Transformation Based Pipeline Time Series Classifier”, 2022, arXiv.

[5] Fawaz et al., “InceptionTime: Finding AlexNet for time series classification”, 2020, DAMI.

[6] Middlehurst et al., “HIVE-COTE 2.0: a new meta ensemble for time series classification”, MACH.

[7] Schäfer and Leser, “WEASEL 2.0 - A Random Dilated Dictionary Transform for Fast, Accurate and Memory Constrained Time Series Classification”, 2023, arXiv.

[8] García and Herrera, “An extension on ‘statistical comparisons of classifiers over multiple data sets’ for all pairwise comparisons”, 2008, JMLR.

[9] Benavoli et al., “Should We Really Use Post-Hoc Tests Based on Mean-Ranks?”, 2016, JMLR.

[10] Demšar, “Statistical Comparisons of Classifiers over Multiple Data Sets”, JMLR.
