Forecasting with aeon¶

In forecasting, past data is used to make predictions about future values of a time series. This is distinct from learning tasks such as classification, regression and clustering, where the quantity being predicted is not necessarily a future value.

This notebook gives a tutorial on forecasting. More specific notebooks in aeon on forecasting are:

aeon provides a common, scikit-learn-like interface to a variety of classical and ML-style forecasting algorithms, together with tools for building pipelines and composite machine learning models, including temporal tuning schemes, or reductions such as walk-forward application of scikit-learn regressors.

Section 1 provides an overview of common forecasting workflows supported by aeon.

Section 2 discusses the families of forecasters available in aeon.

Section 3 discusses advanced composition patterns, including pipeline building, reduction, tuning, ensembling, and autoML.

Section 4 gives an introduction to how to write custom estimators compliant with the aeon interface.

Table of Contents¶

1. Basic forecasting workflows

1.1 Data container format

1.2 Basic deployment workflow - batch fitting and forecasting

1.2.1 Basic deployment workflow in a nutshell

1.2.2 Forecasters that require the horizon when fitting

1.2.3 Forecasters that can make use of exogenous data

1.2.4 Multivariate Forecasters

1.2.5 Prediction intervals and quantile forecasts

1.2.6 Panel forecasts and hierarchical forecasts

1.3 Basic evaluation workflow - evaluating a batch of forecasts against ground truth observations

1.3.1 The basic batch forecast evaluation workflow in a nutshell - function metric interface

1.3.2 The basic batch forecast evaluation workflow in a nutshell - metric class interface

1.4 Advanced deployment workflow: rolling updates & forecasts

1.4.1 Updating a forecaster with the update method

1.4.2 Moving the “now” state without updating the model

1.4.3 Walk-forward predictions on a batch of data

1.5 Advanced evaluation workflow: rolling re-sampling and aggregate errors, rolling back-testing

2. Forecasters in aeon - searching, tags, common families

2.1 Forecaster lookup - the registry

2.2 Forecaster tags

2.2.1 Capability tags: multivariate, probabilistic, hierarchical

2.2.2 Finding and listing forecasters by tag

2.2.3 Listing all forecaster tags

2.3 Common forecaster types

2.3.1 Exponential smoothing, theta forecaster, autoETS from statsmodels

2.3.2 ARIMA and autoARIMA

2.3.3 BATS and TBATS

2.3.4 Facebook prophet

2.3.5 State Space Model (Structural Time Series)

2.3.6 AutoArima from StatsForecast

3. Advanced composition patterns - pipelines, reduction, autoML, and more

3.1 Reduction: from forecasting to regression

3.2 Pipelining, detrending and deseasonalization

3.2.1 The basic forecasting pipeline

3.2.2 The Detrender as pipeline component

3.2.3 Complex pipeline composites and parameter inspection

3.3 Parameter tuning

3.3.1 Basic tuning using ForecastingGridSearchCV

3.3.2 Tuning of complex composites

3.3.3 Selecting the metric and retrieving scores

3.4 autoML aka automated model selection, ensembling and hedging

3.4.1 autoML aka automatic model selection, using tuning plus multiplexer

3.4.2 autoML: selecting transformer combinations via OptimalPassthrough

3.4.3 Simple ensembling strategies

3.4.4 Prediction weighted ensembles and hedge ensembles

Package imports¶

[1]:

import warnings

import numpy as np

import pandas as pd

# hide warnings

warnings.filterwarnings("ignore")

1. Basic forecasting workflows¶

This section explains the basic forecasting workflows and their key interface points.

We cover the following four workflows:

Basic deployment workflow: batch fitting and forecasting;

Basic evaluation workflow: evaluating a batch of forecasts against ground truth observations;

Advanced deployment workflow: fitting and rolling updates/forecasts; and

Advanced evaluation workflow: using rolling forecast splits and computing split-wise and aggregate errors, including common back-testing schemes.

1.1 Data container format¶

All forecasting workflows make common assumptions on the input data format. aeon uses pandas for representing time series for forecasting:

pd.DataFrame for time series and sequences. Rows represent time indices, columns represent variables;

pd.Series can be used for univariate time series;

numpy arrays (1D and 2D) can also be used.

The Series.index and DataFrame.index are used for representing the time series index. aeon supports pandas integer, period and timestamp indices for simple time series.

aeon supports additional data structures for panel and hierarchical time series, see the data structure notebooks for more details.

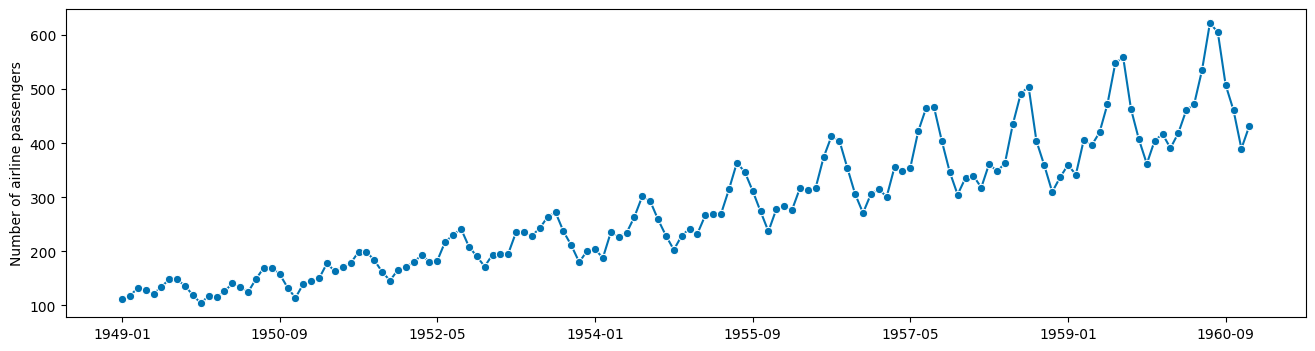

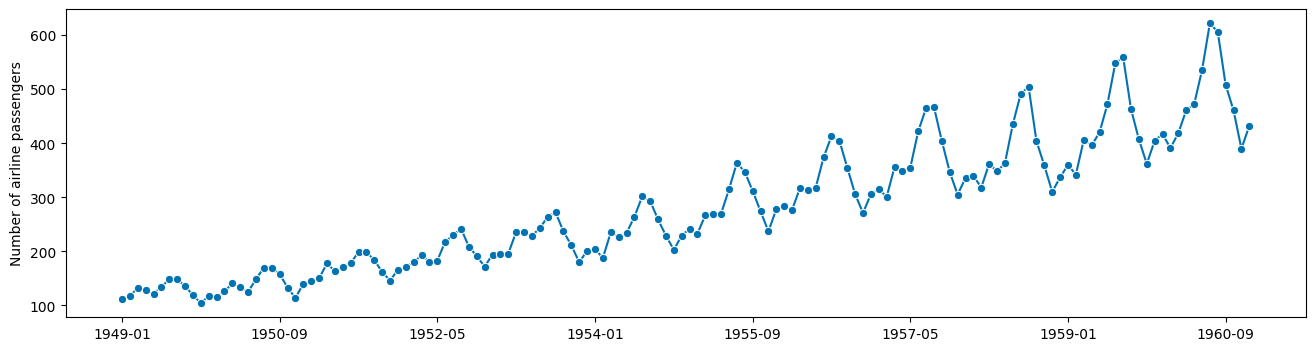

Example: as the running example in this tutorial, we use a textbook data set, the Box-Jenkins airline data set, which consists of the number of monthly totals of international airline passengers, from 1949 - 1960. Values are in thousands. See “Makridakis, Wheelwright and Hyndman (1998) Forecasting: methods and applications”, exercises sections 2 and 3.

[2]:

from aeon.datasets import load_airline

from aeon.visualisation import plot_series

[3]:

y = load_airline()

# plotting for visualization

plot_series(y)

[3]:

(<Figure size 1600x400 with 1 Axes>,

<Axes: ylabel='Number of airline passengers'>)

[4]:

y.index

[4]:

PeriodIndex(['1949-01', '1949-02', '1949-03', '1949-04', '1949-05', '1949-06',

'1949-07', '1949-08', '1949-09', '1949-10',

...

'1960-03', '1960-04', '1960-05', '1960-06', '1960-07', '1960-08',

'1960-09', '1960-10', '1960-11', '1960-12'],

dtype='period[M]', name='Period', length=144)

1.2 Basic deployment workflow - batch fitting and forecasting¶

The simplest use case is batch fitting and forecasting, i.e., fitting a forecasting model to one batch of past data, then asking for forecasts at time points in the future.

The steps in this workflow are as follows:

Preparation of the data

Specification of the time points for which forecasts are requested. This uses a numpy.array or the ForecastingHorizon object.

Specification and instantiation of the forecaster. This follows a scikit-learn-like syntax; forecaster objects follow the familiar scikit-learn BaseEstimator interface.

Fitting the forecaster to the data, using the forecaster's fit method

Making a forecast, using the forecaster's predict method

The following first outlines the vanilla variant of the basic deployment workflow, step-by-step.

At the end, one-cell workflows are provided, with common deviations from the pattern (Sections 1.2.1 and following).

Step 1 - Preparation of the data¶

We assume here that the data is in pd.Series or pd.DataFrame format.

[5]:

from aeon.datasets import load_airline

from aeon.visualisation import plot_series

[6]:

# in the example, we use the airline data set.

y = load_airline()

plot_series(y)

[6]:

(<Figure size 1600x400 with 1 Axes>,

<Axes: ylabel='Number of airline passengers'>)

Step 2 - Specifying the forecasting horizon¶

Now we need to specify the forecasting horizon and pass that to our forecasting algorithm.

There are two main ways of doing this:

Using a numpy.array of integers. This assumes either an integer index or a periodic index (PeriodIndex) in the time series; the integer indicates the number of time points or periods ahead we want to make a forecast for. E.g., 1 means forecast the next period, 2 the second next period, and so on.

Using a ForecastingHorizon object. This can be used to define forecast horizons, using any supported index type as an argument. No periodic index is assumed.

Forecasting horizons can be absolute, i.e., referencing specific time points in the future, or relative, i.e., referencing time differences to the present. By default, the present is the latest time point seen in any y passed to the forecaster.

numpy.array based forecasting horizons are always relative; ForecastingHorizon objects can be both relative and absolute. In particular, absolute forecasting horizons can only be specified using ForecastingHorizon.

Using a numpy forecasting horizon¶

[7]:

fh = np.arange(1, 37)

fh

[7]:

array([ 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17,

18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34,

35, 36])

This will ask for monthly predictions for the next three years, since the original series period is 1 month. To predict only the second and fifth month ahead, one would pass an array as follows:

import numpy as np

fh = np.array([2, 5]) # 2nd and 5th step ahead

Using a ForecastingHorizon based forecasting horizon¶

The ForecastingHorizon object considers the input absolute or relative depending on the is_relative flag.

ForecastingHorizon will automatically assume a relative horizon if temporal difference types from pandas are passed; if value types from pandas are passed, it will assume an absolute horizon.

To define an absolute ForecastingHorizon in our example:

[8]:

from aeon.forecasting.base import ForecastingHorizon

[9]:

fh = ForecastingHorizon(

pd.PeriodIndex(pd.date_range("1961-01", periods=36, freq="M")), is_relative=False

)

fh

[9]:

ForecastingHorizon(['1961-01', '1961-02', '1961-03', '1961-04', '1961-05', '1961-06',

'1961-07', '1961-08', '1961-09', '1961-10', '1961-11', '1961-12',

'1962-01', '1962-02', '1962-03', '1962-04', '1962-05', '1962-06',

'1962-07', '1962-08', '1962-09', '1962-10', '1962-11', '1962-12',

'1963-01', '1963-02', '1963-03', '1963-04', '1963-05', '1963-06',

'1963-07', '1963-08', '1963-09', '1963-10', '1963-11', '1963-12'],

dtype='period[M]', is_relative=False)

A ForecastingHorizon can be converted from relative to absolute and back via the to_relative and to_absolute methods. Both of these conversions require a compatible cutoff to be passed:

[10]:

cutoff = pd.Period("1960-12", freq="M")

[11]:

fh.to_relative(cutoff)

[11]:

ForecastingHorizon([ 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18,

19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36],

dtype='int32', is_relative=True)

[12]:

fh.to_absolute(cutoff)

[12]:

ForecastingHorizon(['1961-01', '1961-02', '1961-03', '1961-04', '1961-05', '1961-06',

'1961-07', '1961-08', '1961-09', '1961-10', '1961-11', '1961-12',

'1962-01', '1962-02', '1962-03', '1962-04', '1962-05', '1962-06',

'1962-07', '1962-08', '1962-09', '1962-10', '1962-11', '1962-12',

'1963-01', '1963-02', '1963-03', '1963-04', '1963-05', '1963-06',

'1963-07', '1963-08', '1963-09', '1963-10', '1963-11', '1963-12'],

dtype='period[M]', is_relative=False)
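The relationship between relative and absolute horizons can be illustrated without aeon. The following is a minimal plain-Python sketch, assuming monthly periods written as "YYYY-MM" strings; the helper names (month_to_ordinal, to_relative, to_absolute) are hypothetical and only mimic the idea behind the ForecastingHorizon methods, not their implementation.

```python
def month_to_ordinal(label):
    """Map a 'YYYY-MM' label to a running month count."""
    year, month = label.split("-")
    return int(year) * 12 + (int(month) - 1)


def ordinal_to_month(ordinal):
    """Inverse of month_to_ordinal."""
    year, month0 = divmod(ordinal, 12)
    return f"{year:04d}-{month0 + 1:02d}"


def to_relative(absolute_labels, cutoff):
    """Number of months after the cutoff for each absolute label."""
    base = month_to_ordinal(cutoff)
    return [month_to_ordinal(label) - base for label in absolute_labels]


def to_absolute(relative_steps, cutoff):
    """Month label reached by stepping ahead from the cutoff."""
    base = month_to_ordinal(cutoff)
    return [ordinal_to_month(base + step) for step in relative_steps]


cutoff = "1960-12"  # last observed month of the airline data
relative = list(range(1, 37))  # 1 to 36 months ahead
absolute = to_absolute(relative, cutoff)

print(absolute[0], absolute[-1])
print(to_relative(absolute, cutoff) == relative)
```

Both directions need the cutoff, which is why the conversion methods above take it as an argument.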

Step 3 - Specifying the forecasting algorithm¶

To make forecasts, a forecasting algorithm needs to be specified. All aeon forecasters follow the same interface, so the steps are the same, no matter which forecaster is chosen.

For this example, we choose the naive forecasting method of predicting the last seen value. More complex specifications are possible, using pipeline and reduction construction syntax; this will be covered later in Section 3.

[13]:

from aeon.forecasting.naive import NaiveForecaster

[14]:

forecaster = NaiveForecaster(strategy="last")

Step 4 - Fitting the forecaster to the seen data¶

Now the forecaster needs to be fitted to the seen data:

[15]:

forecaster.fit(y)

[15]:

NaiveForecaster()

Step 5 - Requesting forecasts¶

Finally, we request forecasts for the specified forecasting horizon. This needs to be done after fitting the forecaster:

[16]:

y_pred = forecaster.predict(fh)

[17]:

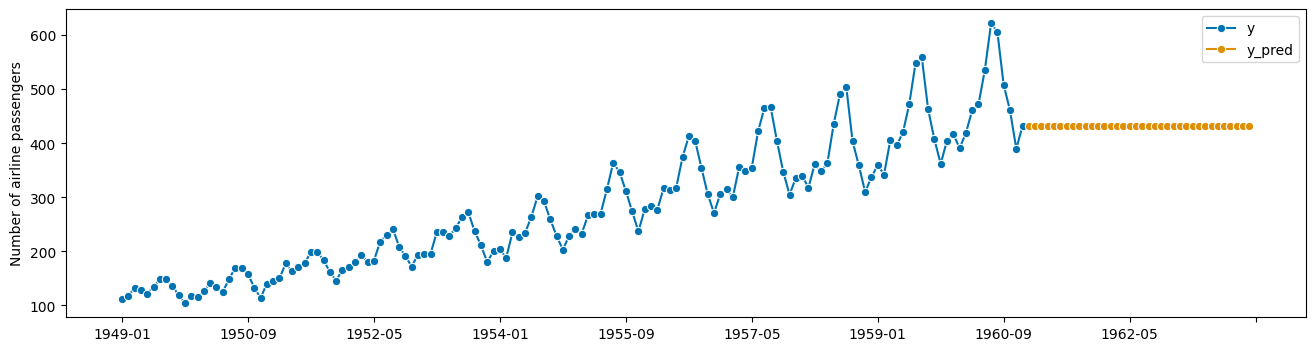

# plotting predictions and past data

plot_series(y, y_pred, labels=["y", "y_pred"])

[17]:

(<Figure size 1600x400 with 1 Axes>,

<Axes: ylabel='Number of airline passengers'>)

1.2.1 The basic deployment workflow in a nutshell¶

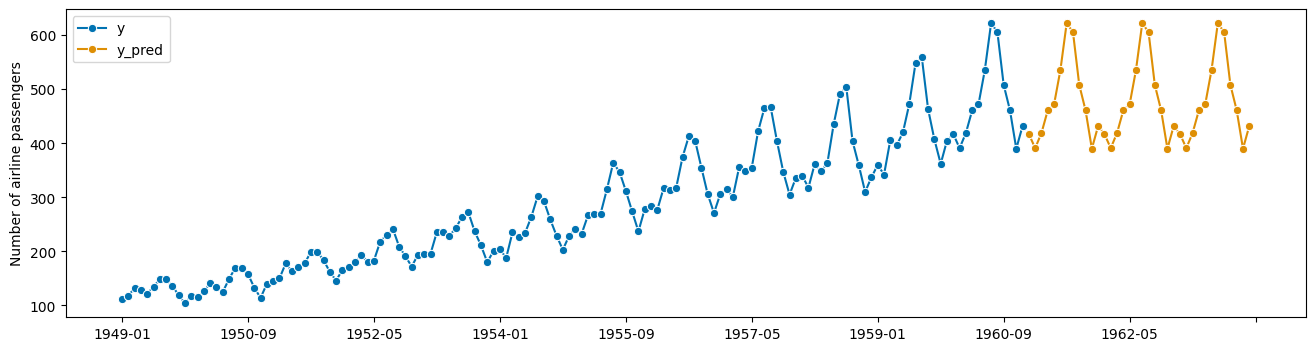

For convenience, we present the basic deployment workflow in one cell. This uses the same data, but a different version of the NaiveForecaster that predicts the latest value observed in the same month (the parameter sp is the seasonal periodicity).

[18]:

from aeon.datasets import load_airline

from aeon.forecasting.base import ForecastingHorizon

from aeon.forecasting.naive import NaiveForecaster

[19]:

# step 1: data specification

y = load_airline()

# step 2: specifying forecasting horizon

fh = np.arange(1, 37)

# step 3: specifying the forecasting algorithm

forecaster = NaiveForecaster(strategy="last", sp=12)

# step 4: fitting the forecaster

forecaster.fit(y)

# step 5: querying predictions

y_pred = forecaster.predict(fh)
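To make the effect of sp concrete, here is a small plain-Python sketch of a seasonal "last value" rule. It is an illustration under simplifying assumptions (a list of numbers, integer relative horizon), and the function name seasonal_naive is hypothetical; it is not the NaiveForecaster implementation.

```python
def seasonal_naive(history, fh, sp=1):
    """For each step h in fh, return the last value seen in the same season.

    With sp=1 this reduces to repeating the last observed value.
    """
    if len(history) < sp:
        raise ValueError("need at least one full season of history")
    last_season = history[-sp:]  # most recent full seasonal cycle
    return [last_season[(h - 1) % sp] for h in fh]


# toy series with a cycle of length 4, two cycles observed
history = [10, 20, 30, 40, 11, 21, 31, 41]

print(seasonal_naive(history, fh=[1, 2, 5], sp=4))
print(seasonal_naive(history, fh=[1, 2, 5]))
```

With sp=12 on monthly data, the same rule gives "the value from the same month of the last observed year".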

[20]:

# optional: plotting predictions and past data

plot_series(y, y_pred, labels=["y", "y_pred"])

[20]:

(<Figure size 1600x400 with 1 Axes>,

<Axes: ylabel='Number of airline passengers'>)

1.2.2 Forecasters that require the horizon already in fit¶

Some forecasters need the forecasting horizon to be provided in fit. Such forecasters will produce informative error messages when it is not passed in fit. All forecasters remember the horizon when it is passed in fit, and use it for prediction. An example:

[21]:

# step 1: data specification

y = load_airline()

# step 2: specifying forecasting horizon

fh = np.arange(1, 37)

# step 3: specifying the forecasting algorithm

forecaster = NaiveForecaster(strategy="last", sp=12)

# step 4: fitting the forecaster

forecaster.fit(y, fh=fh)

# step 5: querying predictions

y_pred = forecaster.predict()
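The "remember the horizon" behaviour can be pictured with a toy class. This is a hypothetical sketch in plain Python (the class LastValueForecaster is not part of aeon), and it assumes the simplest possible policy: a horizon given in predict takes precedence over one stored in fit.

```python
class LastValueForecaster:
    """Toy forecaster that stores the horizon passed to fit (illustrative only)."""

    def __init__(self):
        self._fh = None
        self._last = None

    def fit(self, y, fh=None):
        self._last = y[-1]  # "model": the last observed value
        if fh is not None:
            self._fh = list(fh)  # remembered for later predict calls
        return self

    def predict(self, fh=None):
        if self._last is None:
            raise RuntimeError("call fit before predict")
        if fh is None:
            fh = self._fh  # fall back to the horizon seen in fit
        if fh is None:
            raise ValueError("no forecasting horizon given in fit or predict")
        return [self._last for _ in fh]


forecaster = LastValueForecaster().fit([1, 2, 3], fh=[1, 2])
print(forecaster.predict())
```

Actual aeon forecasters may apply stricter rules, for example when the horizons passed to fit and predict differ.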

1.2.3 Forecasters that can make use of exogenous data¶

Many forecasters can make use of exogenous time series, i.e., other time series that are not to be forecast, but are useful for forecasting y. Exogenous time series are always passed as an X argument, in fit, predict, and other methods (see below). Exogenous time series should always be passed as pandas.DataFrames. Most forecasters that can deal with exogenous time series will assume that the time indices of X passed to fit are a super-set of the time indices in y passed to fit; and that the time indices of X passed to predict are a super-set of time indices in fh, although this is not a general interface restriction. Forecasters that do not make use of exogenous time series still accept the argument (and do not use it internally).
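The two super-set conditions can be checked before calling a forecaster. The following plain-Python sketch assumes indices are given as lists of hashable labels; the helper check_exogenous_coverage is hypothetical and not an aeon function.

```python
def check_exogenous_coverage(y_index, X_fit_index, fh_index, X_pred_index):
    """Return the time points missing from X in fit and in predict."""
    missing_in_fit = sorted(set(y_index) - set(X_fit_index))
    missing_in_predict = sorted(set(fh_index) - set(X_pred_index))
    return missing_in_fit, missing_in_predict


y_index = ["1960-10", "1960-11", "1960-12"]
X_fit_index = ["1960-09", "1960-10", "1960-11", "1960-12"]  # covers y
fh_index = ["1961-01", "1961-02"]
X_pred_index = ["1961-01"]  # does not cover the full horizon

missing_fit, missing_pred = check_exogenous_coverage(
    y_index, X_fit_index, fh_index, X_pred_index
)
print(missing_fit, missing_pred)
```

An empty list in both positions means the usual assumptions are met.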

The general workflow for passing exogenous data is as follows:

[22]:

# step 1: data specification

y = load_airline()

# we create some dummy exogenous data

X = pd.DataFrame(index=y.index)

# step 2: specifying forecasting horizon

fh = np.arange(1, 37)

# step 3: specifying the forecasting algorithm

forecaster = NaiveForecaster(strategy="last", sp=12)

# step 4: fitting the forecaster

forecaster.fit(y, X=X, fh=fh)

# step 5: querying predictions

y_pred = forecaster.predict(X=X)

NOTE: as in workflows 1.2.1 and 1.2.2, some forecasters that use exogenous variables may require the forecasting horizon only in predict. Such forecasters can also be called with steps 4 and 5 being

forecaster.fit(y, X=X)

y_pred = forecaster.predict(fh=fh, X=X)

1.2.4 Multivariate forecasting¶

All forecasters in aeon support multivariate forecasts - some forecasters are “genuine” multivariate, all others “apply by column”.

Below is an example of the general multivariate forecasting workflow, using the VAR (vector auto-regression) forecaster on the Longley dataset from aeon.datasets. The workflow is the same as for univariate forecasters, but the input has more than one variable (column).

[23]:

from aeon.datasets import load_longley

from aeon.forecasting.var import VAR

_, y = load_longley()

y = y.drop(columns=["UNEMP", "ARMED", "POP"])

forecaster = VAR()

forecaster.fit(y, fh=[1, 2, 3])

y_pred = forecaster.predict()

The input to the multivariate forecaster y is a pandas.DataFrame where each column is a variable.

[24]:

y

[24]:

| Period | GNPDEFL | GNP |
|---|---|---|
| 1947 | 83.0 | 234289.0 |
| 1948 | 88.5 | 259426.0 |
| 1949 | 88.2 | 258054.0 |
| 1950 | 89.5 | 284599.0 |
| 1951 | 96.2 | 328975.0 |
| 1952 | 98.1 | 346999.0 |
| 1953 | 99.0 | 365385.0 |
| 1954 | 100.0 | 363112.0 |
| 1955 | 101.2 | 397469.0 |
| 1956 | 104.6 | 419180.0 |
| 1957 | 108.4 | 442769.0 |
| 1958 | 110.8 | 444546.0 |
| 1959 | 112.6 | 482704.0 |
| 1960 | 114.2 | 502601.0 |
| 1961 | 115.7 | 518173.0 |
| 1962 | 116.9 | 554894.0 |

The result of the multivariate forecaster y_pred is a pandas.DataFrame where columns are the predicted values for each variable. The variables in y_pred are the same as in y, the input to the multivariate forecaster.

[25]:

y_pred

[25]:

| Period | GNPDEFL | GNP |
|---|---|---|
| 1963 | 121.688295 | 578514.398653 |
| 1964 | 124.353664 | 601873.015890 |
| 1965 | 126.847886 | 625411.588754 |

There are two categories of multivariate forecasters:

forecasters that are genuinely multivariate, such as VAR. Forecasts for one endogenous (y) variable will depend on values of other variables (X).

forecasters that are univariate, such as ARIMA. Forecasts will be made per endogenous (y) variable, and will not be affected by other variables (X).

To display the complete list of multivariate forecasters, search for forecasters with the value 'multivariate' or 'both' for the tag 'y_input_type', as follows:

[26]:

import warnings

from aeon.registry import all_estimators

warnings.filterwarnings("ignore")

# each entry is indexed as in the original cell: position 0 holds the estimator name

for estimator in all_estimators(

    filter_tags={"y_input_type": ["multivariate", "both"]}

):

    print(estimator[0])

ColumnEnsembleForecaster

DynamicFactor

EnsembleForecaster

ForecastByLevel

ForecastingGridSearchCV

ForecastingPipeline

ForecastingRandomizedSearchCV

HierarchyEnsembleForecaster

MockForecaster

MultiplexForecaster

Permute

TransformedTargetForecaster

VAR

VARMAX

VECM

YtoX

Univariate forecasters have tag value 'univariate', and will fit one model per column. To access the column-wise models, access the forecasters_ parameter, which stores the fitted forecasters in a pandas.DataFrame, fitted forecasters being in the column with the variable for which the forecast is being made:

[27]:

from aeon.datasets import load_longley

from aeon.forecasting.arima import ARIMA

_, y = load_longley()

y = y.drop(columns=["UNEMP", "ARMED", "POP"])

forecaster = ARIMA()

forecaster.fit(y, fh=[1, 2, 3])

forecaster.forecasters_

[27]:

| | GNPDEFL | GNP |
|---|---|---|
| forecasters | ARIMA() | ARIMA() |
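The "apply by column" idea is independent of the specific forecaster. The following is a hedged plain-Python sketch, using a dict of columns and a trivial last-value model in place of ARIMA; fit_last_value and fit_by_column are hypothetical helpers, not aeon functions.

```python
def fit_last_value(series):
    """Fit a trivial univariate model: remember the last observed value."""
    last = series[-1]
    return lambda fh: [last for _ in fh]


def fit_by_column(columns):
    """Fit one independent univariate model per column."""
    return {name: fit_last_value(values) for name, values in columns.items()}


# last two rows of the Longley columns used above
y = {"GNPDEFL": [115.7, 116.9], "GNP": [518173.0, 554894.0]}

models = fit_by_column(y)  # plays the role of forecasters_
y_pred = {name: model([1, 2, 3]) for name, model in models.items()}
print(y_pred)
```

Each column's forecast depends only on that column, which is the defining property of a univariate forecaster applied to multivariate data.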

1.2.5 Probabilistic forecasting: prediction intervals, quantile, variance, and distributional forecasts¶

aeon provides a unified interface to make probabilistic forecasts. The following methods are possibly available for probabilistic forecasts:

predict_interval produces interval forecasts. In addition to any predict arguments, an argument coverage (nominal interval coverage) must be provided.

predict_quantiles produces quantile forecasts. In addition to any predict arguments, an argument alpha (quantile values) must be provided.

predict_var produces variance forecasts. This has the same arguments as predict.

predict_proba produces full distributional forecasts. This has the same arguments as predict.

Not all forecasters are capable of returning probabilistic forecasts, but if a forecaster provides one kind of probabilistic forecast, it is also capable of returning the others. The list of forecasters with this capability can be queried with registry.all_estimators, searching for those where the capability:pred_int tag has value True.

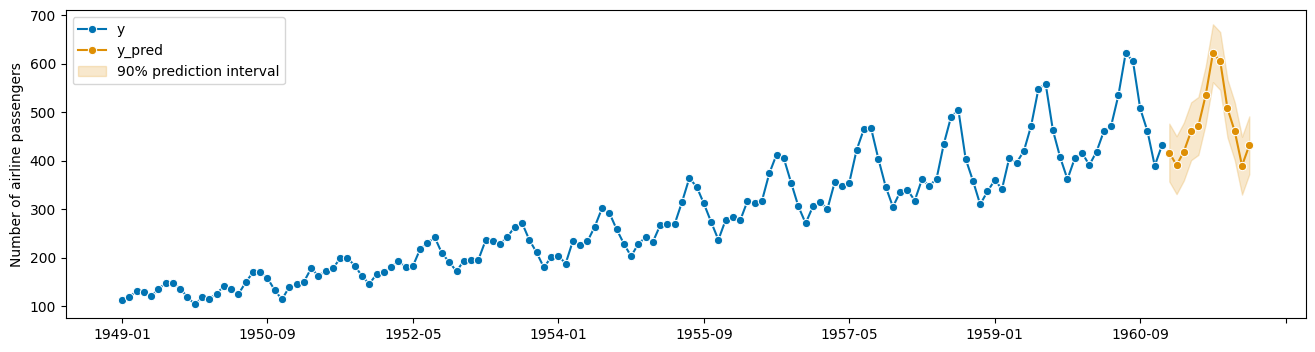

The basic workflow for probabilistic forecasts is similar to the basic forecasting workflow, with the difference that instead of predict, one of the probabilistic forecasting methods is used:

[28]:

import numpy as np

from aeon.datasets import load_airline

from aeon.forecasting.naive import NaiveForecaster

# until fit, identical with the simple workflow

y = load_airline()

fh = np.arange(1, 13)

forecaster = NaiveForecaster(sp=12)

forecaster.fit(y, fh=fh)

[28]:

NaiveForecaster(sp=12)

Now we present the different probabilistic forecasting methods.

predict_interval - interval predictions¶

predict_interval takes an argument coverage, which is a float (or list of floats), the nominal coverage of the prediction interval(s) queried. predict_interval produces symmetric prediction intervals, for example, a coverage of 0.9 returns a “lower” forecast at quantile 0.5 - coverage/2 = 0.05, and an “upper” forecast at quantile 0.5 + coverage/2 = 0.95.

[29]:

coverage = 0.9

y_pred_ints = forecaster.predict_interval(coverage=coverage)

y_pred_ints

[29]:

| | Coverage | |
|---|---|---|
| | 0.9 | |
| | lower | upper |
| 1961-01 | 357.265915 | 476.734085 |
| 1961-02 | 331.265915 | 450.734085 |
| 1961-03 | 359.265915 | 478.734085 |
| 1961-04 | 401.265915 | 520.734085 |
| 1961-05 | 412.265915 | 531.734085 |
| 1961-06 | 475.265915 | 594.734085 |
| 1961-07 | 562.265915 | 681.734085 |
| 1961-08 | 546.265915 | 665.734085 |
| 1961-09 | 448.265915 | 567.734085 |
| 1961-10 | 401.265915 | 520.734085 |
| 1961-11 | 330.265915 | 449.734085 |
| 1961-12 | 372.265915 | 491.734085 |

The return y_pred_ints is a pandas.DataFrame with a column multi-index: the first level is the variable name from y in fit (or Coverage if no variable names were present), the second level contains the coverage fractions for which intervals were computed, in the same order as in the input coverage; the third level contains the columns lower and upper. Rows are the indices for which forecasts were made (same as in y_pred or fh). Entries are the lower/upper (as per column name) bounds of the nominal coverage predictive interval for the index in the same row.

Pretty-plotting the predictive interval forecasts:

[30]:

from aeon.visualisation import plot_series

# also requires predictions

y_pred = forecaster.predict()

fig, ax = plot_series(y, y_pred, labels=["y", "y_pred"], pred_interval=y_pred_ints)

predict_quantiles - quantile forecasts¶

aeon offers predict_quantiles as a unified interface to return quantile values of predictions, similar to predict_interval.

predict_quantiles has an argument alpha, containing the quantile values being queried. Similar to the case of the predict_interval, alpha can be a float, or a list of floats.

[31]:

y_pred_quantiles = forecaster.predict_quantiles(alpha=[0.275, 0.975])

y_pred_quantiles

[31]:

| | Quantiles | |
|---|---|---|
| | 0.275 | 0.975 |
| 1961-01 | 395.291896 | 488.177552 |
| 1961-02 | 369.291896 | 462.177552 |
| 1961-03 | 397.291896 | 490.177552 |
| 1961-04 | 439.291896 | 532.177552 |
| 1961-05 | 450.291896 | 543.177552 |
| 1961-06 | 513.291896 | 606.177552 |
| 1961-07 | 600.291896 | 693.177552 |
| 1961-08 | 584.291896 | 677.177552 |
| 1961-09 | 486.291896 | 579.177552 |
| 1961-10 | 439.291896 | 532.177552 |
| 1961-11 | 368.291896 | 461.177552 |
| 1961-12 | 410.291896 | 503.177552 |

y_pred_quantiles, the output of predict_quantiles, is a pandas.DataFrame with a two-level column multi-index. The first level is the variable name from y in fit (or Quantiles if no variable names were present), the second level contains the quantile values (from alpha) for which quantile predictions were queried. Rows are the indices for which forecasts were made (same as in y_pred or fh). Entries are the quantile predictions for that variable and that quantile value, for the time index in the same row.

Remark: for clarity: quantile and (symmetric) interval forecasts can be translated into each other as follows.

alpha < 0.5: The alpha-quantile prediction is equal to the lower bound of a predictive interval with coverage = (0.5 - alpha) * 2

alpha > 0.5: The alpha-quantile prediction is equal to the upper bound of a predictive interval with coverage = (alpha - 0.5) * 2
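The translation rules above amount to two small formulas. The following plain-Python sketch spells them out; the function names interval_to_quantiles and quantile_to_interval are hypothetical and chosen for illustration only.

```python
def interval_to_quantiles(coverage):
    """Quantile levels of the lower and upper bound of a symmetric interval."""
    return 0.5 - coverage / 2, 0.5 + coverage / 2


def quantile_to_interval(alpha):
    """Coverage of the symmetric interval that has the alpha-quantile as a bound."""
    if alpha == 0.5:
        raise ValueError("the median is not a bound of any symmetric interval")
    coverage = abs(alpha - 0.5) * 2
    bound = "lower" if alpha < 0.5 else "upper"
    return coverage, bound


lower_q, upper_q = interval_to_quantiles(0.9)
print(round(lower_q, 3), round(upper_q, 3))

coverage, bound = quantile_to_interval(0.975)
print(round(coverage, 3), bound)
```

Rounding is used only for display, since the intermediate values are floating point numbers.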

predict_var - variance predictions¶

predict_var produces variance predictions:

[32]:

y_pred_var = forecaster.predict_var()

y_pred_var

[32]:

| | 0 |
|---|---|
| 1961-01 | 1318.833333 |
| 1961-02 | 1318.833333 |
| 1961-03 | 1318.833333 |
| 1961-04 | 1318.833333 |
| 1961-05 | 1318.833333 |
| 1961-06 | 1318.833333 |
| 1961-07 | 1318.833333 |
| 1961-08 | 1318.833333 |
| 1961-09 | 1318.833333 |
| 1961-10 | 1318.833333 |
| 1961-11 | 1318.833333 |
| 1961-12 | 1318.833333 |

The format of the output y_pred_var is the same as for predict, except that this is always coerced to a pandas.DataFrame, and entries are not point predictions but variance predictions.
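The variance, quantile and interval outputs shown in this section can be related to each other numerically. The sketch below assumes a normal predictive distribution, which is an assumption made here for illustration and not a statement about how the forecaster computes its outputs. It takes the midpoint of the 1961-01 interval (417.0) as the point forecast and the displayed variance, and uses only the Python standard library.

```python
from statistics import NormalDist


def normal_quantile_forecast(point, variance, alpha):
    """alpha-quantile of a normal distribution with the given mean and variance."""
    return NormalDist(mu=point, sigma=variance**0.5).inv_cdf(alpha)


point = 417.0  # midpoint of the 1961-01 interval displayed above
variance = 1318.833333  # value displayed by predict_var

# a 0.9 coverage interval corresponds to the 0.05 and 0.95 quantiles
lower = normal_quantile_forecast(point, variance, 0.05)
upper = normal_quantile_forecast(point, variance, 0.95)

print(round(lower, 2), round(upper, 2))
```

Under this assumption, the result agrees with the displayed 1961-01 interval bounds of 357.265915 and 476.734085 up to rounding.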

predict_proba - distribution predictions¶

To predict full predictive distributions, predict_proba can be used. As this returns tensorflow Distribution objects, the deep learning dependency set dl of aeon (which includes tensorflow and tensorflow-probability dependencies) must be installed.

[33]:

y_pred_proba = forecaster.predict_proba()

y_pred_proba

[33]:

<tfp.distributions.Normal 'Normal' batch_shape=[12, 1] event_shape=[] dtype=float32>

Distributions returned by predict_proba are by default marginal at time points, not joint over time points. More precisely, the returned Distribution object is formatted and to be interpreted as follows:

Batch shape is 1D and same length as fh

Event shape is 1D, with length equal to number of variables being forecast

i-th (batch) distribution is forecast for i-th entry of fh

j-th (event) component is j-th variable, same order as y in fit/update

To return joint forecast distributions, the marginal parameter can be set to False (currently work in progress). In this case, a Distribution with 2D event shape (len(fh), len(y)) is returned.

1.2.6 Panel forecasts and hierarchical forecasts¶

aeon provides a unified interface to make panel and hierarchical forecasts.

All aeon forecasters can be applied to panel and hierarchical data, which needs to be presented in specific input formats. Forecasters that are not genuinely panel or hierarchical forecasters will be applied by instance.

The recommended (not the only) format to pass panel and hierarchical data is a pandas.DataFrame with MultiIndex row. In this MultiIndex, the last level must be in an aeon compatible time index format, the remaining levels are panel or hierarchy nodes.

Example data:

[34]:

from aeon.testing.utils.data_gen import _bottom_hier_datagen

y = _bottom_hier_datagen(no_levels=2)

y

[34]:

| l2_agg | l1_agg | timepoints | passengers |
|---|---|---|---|
| l2_node01 | l1_node02 | 1949-01 | 52.943320 |
| | | 1949-02 | 54.874511 |
| | | 1949-03 | 59.379565 |
| | | 1949-04 | 58.414313 |
| | | 1949-05 | 55.840000 |
| ... | ... | ... | ... |
| l2_node03 | l1_node05 | 1960-08 | 2221.886565 |
| | | 1960-09 | 1801.134311 |
| | | 1960-10 | 1606.045386 |
| | | 1960-11 | 1320.202973 |
| | | 1960-12 | 1487.967083 |

864 rows × 1 columns

As stated, all forecasters, genuinely hierarchical or not, can be applied, with all workflows described in this section, to produce hierarchical forecasts.

The syntax is exactly the same as for plain time series, except for the hierarchy levels in input and output data:

[35]:

from aeon.forecasting.arima import ARIMA

fh = [1, 2, 3]

forecaster = ARIMA()

forecaster.fit(y, fh=fh)

forecaster.predict()

[35]:

| l2_agg | l1_agg | timepoints | passengers |
|---|---|---|---|
| l2_node01 | l1_node02 | 1961-01 | 154.000381 |
| | | 1961-02 | 152.311358 |
| | | 1961-03 | 150.682121 |
| | l1_node03 | 1961-01 | 2805.041069 |
| | | 1961-02 | 2770.577861 |
| | | 1961-03 | 2737.335536 |
| l2_node02 | l1_node01 | 1961-01 | 426.544850 |
| | | 1961-02 | 421.282983 |
| | | 1961-03 | 416.207550 |
| | l1_node04 | 1961-01 | 892.307468 |
| | | 1961-02 | 879.432170 |
| | | 1961-03 | 867.062017 |
| | l1_node06 | 1961-01 | 3237.664605 |
| | | 1961-02 | 3192.489057 |
| | | 1961-03 | 3149.040796 |
| l2_node03 | l1_node05 | 1961-01 | 1465.123534 |
| | | 1961-02 | 1443.194107 |
| | | 1961-03 | 1422.142219 |

Similar to multivariate forecasting, forecasters that are not genuinely hierarchical fit by instance. The forecasters fitted by instance can be accessed in the forecasters_ parameter, which is a pandas.DataFrame where forecasters for a given instance are placed in the row with the index of the instance for which they make forecasts:

[36]:

forecaster.forecasters_

[36]:

| l2_agg | l1_agg | forecasters |
|---|---|---|
| l2_node01 | l1_node02 | ARIMA() |
| | l1_node03 | ARIMA() |
| l2_node02 | l1_node01 | ARIMA() |
| | l1_node04 | ARIMA() |
| | l1_node06 | ARIMA() |
| l2_node03 | l1_node05 | ARIMA() |

If the data is both hierarchical and multivariate, and the forecaster cannot genuinely deal with either, the forecasters_ attribute will have both column indices, for variables, and row indices, for instances, with forecasters fitted per instance and variable:

[ ]:

from aeon.forecasting.arima import ARIMA

from aeon.testing.utils.data_gen import _make_hierarchical

y = _make_hierarchical(n_columns=2)

fh = [1, 2, 3]

forecaster = ARIMA()

forecaster.fit(y, fh=fh)

forecaster.forecasters_

Further details on hierarchical forecasting, including reduction, aggregation, reconciliation, are presented in the “hierarchical forecasting” tutorial.

1.3 Basic evaluation workflow - evaluating a batch of forecasts against ground truth observations¶

It is good practice to evaluate statistical performance of a forecaster before deploying it, and regularly re-evaluate performance if in continuous deployment. The evaluation workflow for the basic batch forecasting task, as solved by the workflow in Section 1.2, consists of comparing batch forecasts with actuals. This is sometimes called (batch-wise) backtesting.

The basic evaluation workflow is as follows:

Splitting a representatively chosen historical series into a temporal training and test set. The test set should be temporally in the future of the training set.

Obtaining batch forecasts, as in Section 1.2, by fitting a forecaster to the training set, and querying predictions for the test set

Specifying a quantitative performance metric to compare the actual test set against predictions

Computing the quantitative performance on the test set

Testing whether this performance is statistically better than a chosen baseline performance

NOTE: Step 5 (testing) is currently not supported in aeon, but is on the development roadmap. For the time being, it is advised to use custom implementations of appropriate methods (e.g., Diebold-Mariano test; stationary confidence intervals).

NOTE: This evaluation set-up determines how well a given algorithm would have performed on past data. Results are representative only insofar as future performance can be assumed to mirror past performance. This can be argued under certain assumptions (e.g., stationarity), but will in general be false. Monitoring of forecasting performance is hence advised in case an algorithm is applied multiple times.

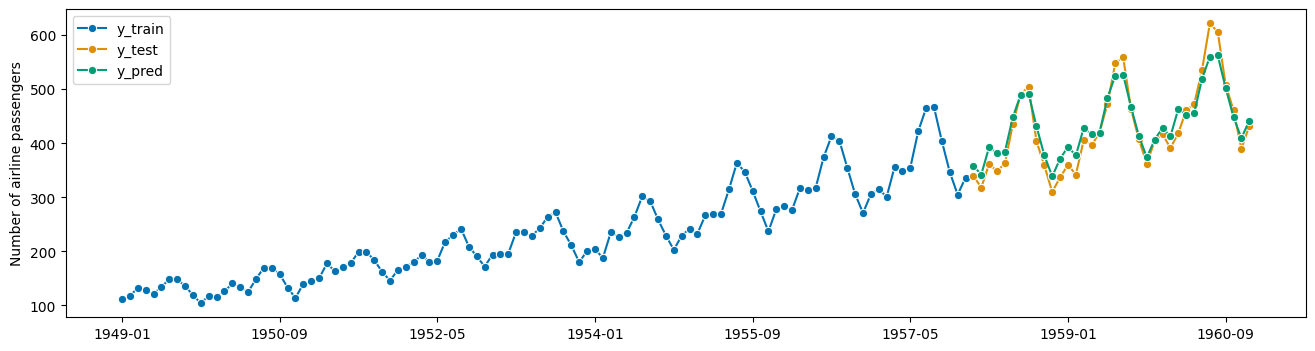

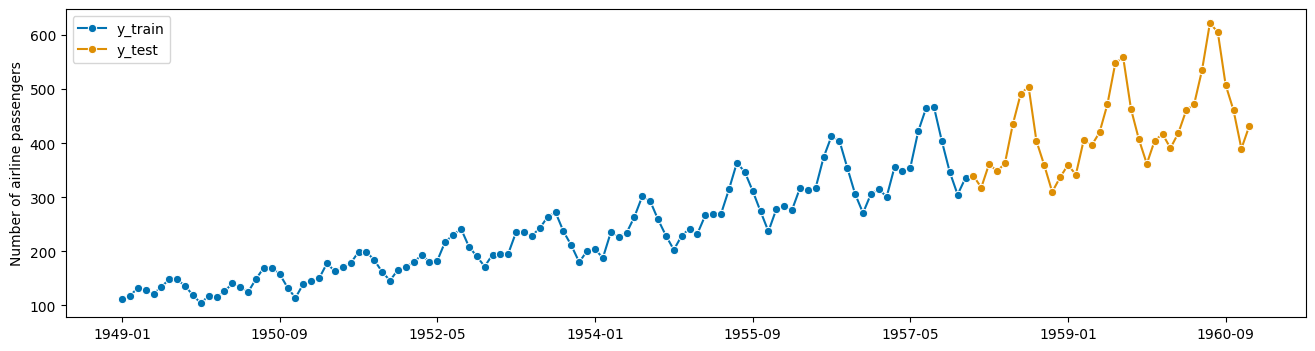

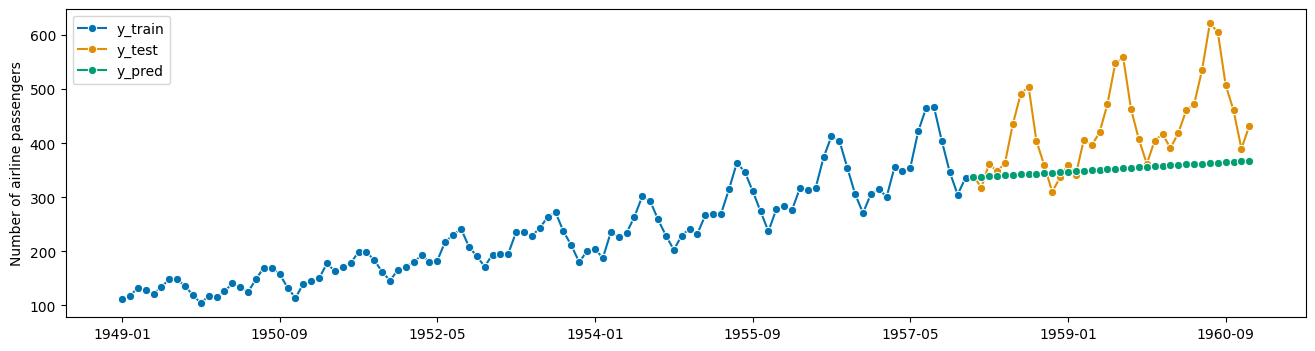

Example: In the example, we will use the same airline data as in Section 1.2. But, instead of predicting the next 3 years, we hold out the last 3 years of the airline data (below: y_test), and see how the forecaster would have performed three years ago, when asked to forecast the most recent 3 years (below: y_pred), from the years before (below: y_train). "How" is measured by a quantitative performance metric (below: mean_absolute_percentage_error). This is then considered as an indication of how well the forecaster would perform in the coming 3 years (what was done in Section 1.2). This may or may not be a stretch depending on statistical assumptions and data properties (caution: it often is a stretch - past performance is in general not indicative of future performance).

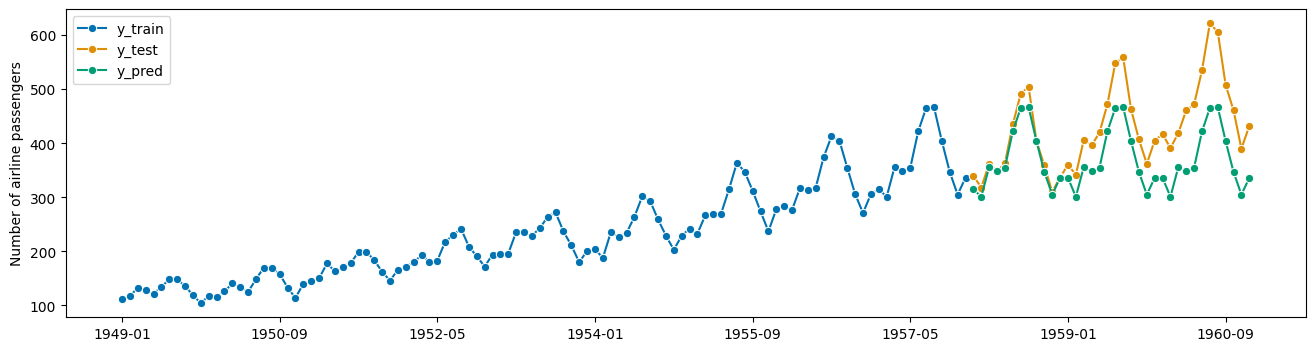

Step 1 - Splitting a historical data set into a temporal train and test batch¶

[38]:

from aeon.forecasting.model_selection import temporal_train_test_split

[39]:

y = load_airline()

y_train, y_test = temporal_train_test_split(y, test_size=36)

# we will try to forecast y_test from y_train

[40]:

# plotting for illustration

plot_series(y_train, y_test, labels=["y_train", "y_test"])

print(y_train.shape[0], y_test.shape[0])

108 36

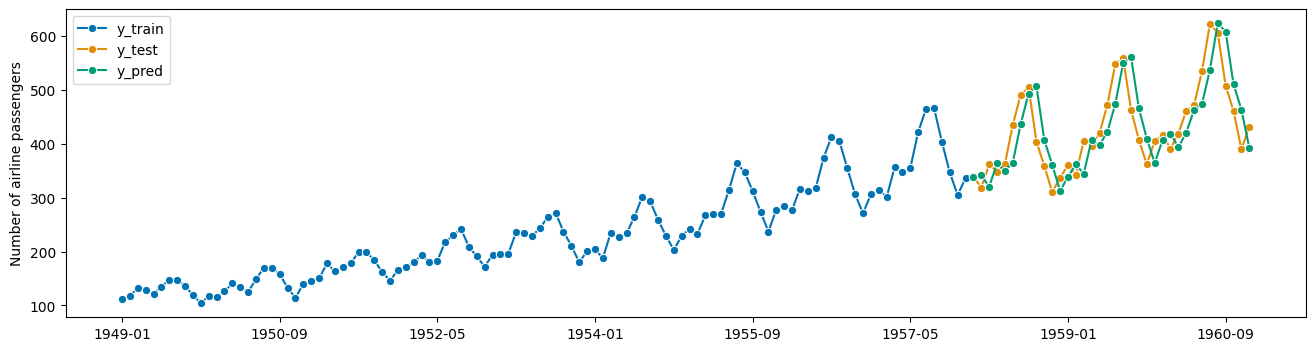

Step 2 - Making forecasts for y_test from y_train¶

This is almost verbatim the workflow in Section 1.2, using y_train to predict the indices of y_test.

[41]:

# we can simply take the indices from `y_test` where they already are stored

fh = ForecastingHorizon(y_test.index, is_relative=False)

forecaster = NaiveForecaster(strategy="last", sp=12)

forecaster.fit(y_train)

# y_pred will contain the predictions

y_pred = forecaster.predict(fh)

[42]:

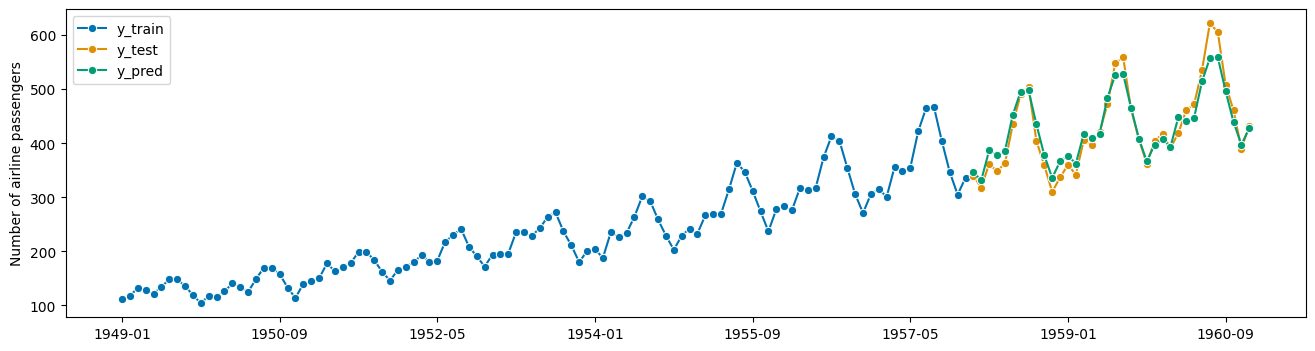

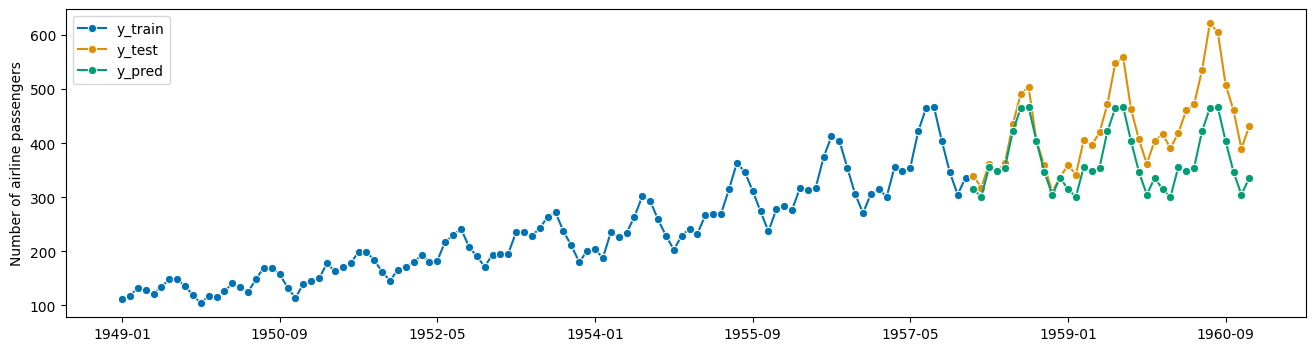

# plotting for illustration

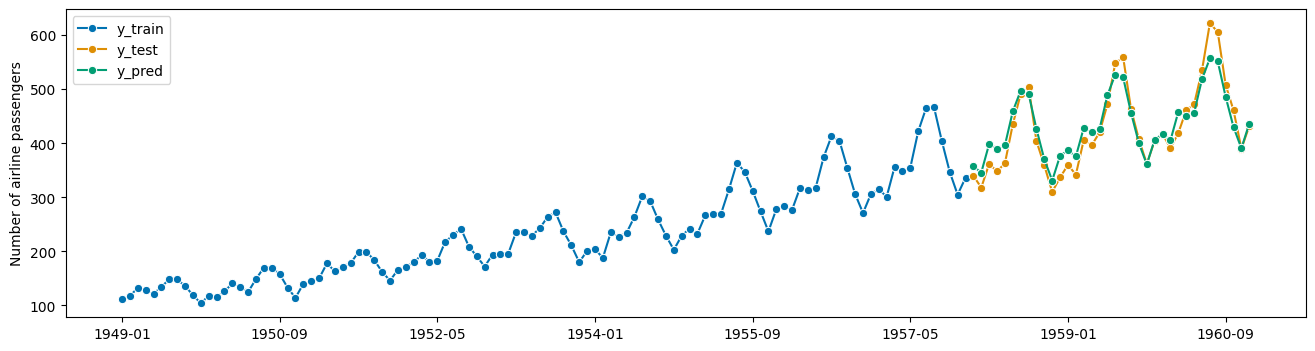

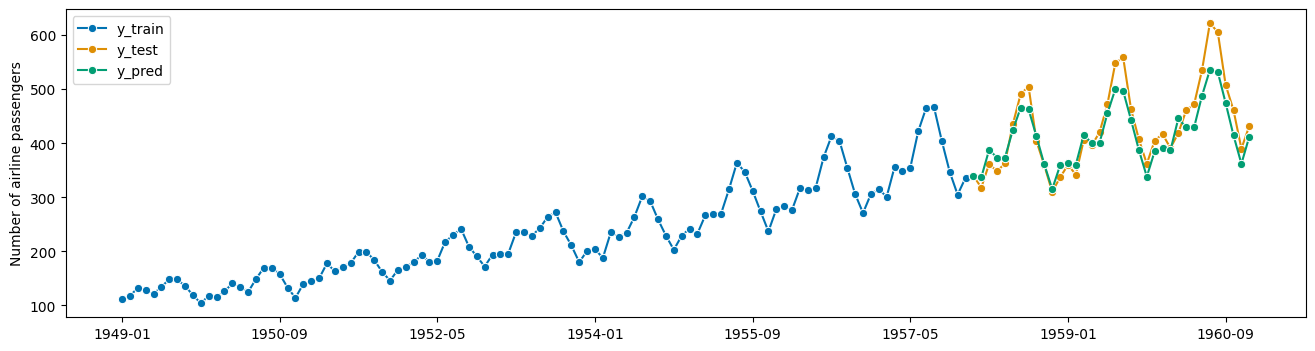

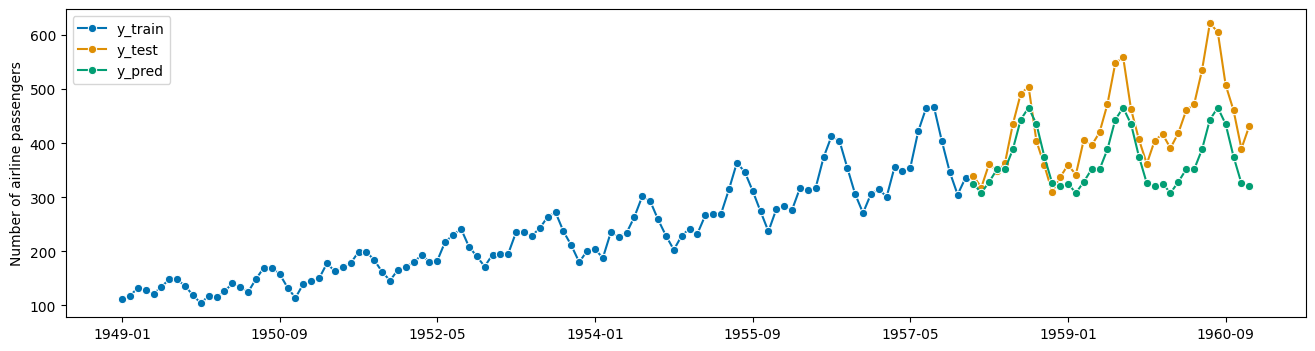

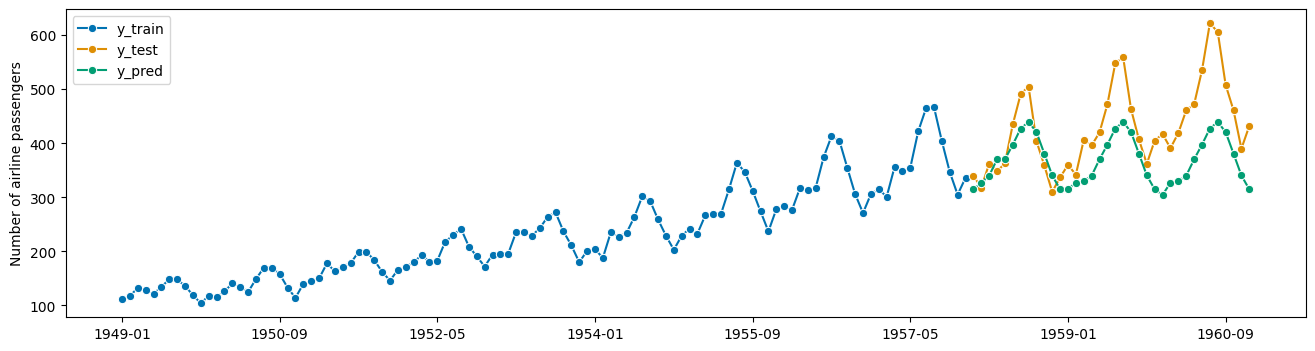

plot_series(y_train, y_test, y_pred, labels=["y_train", "y_test", "y_pred"])

[42]:

(<Figure size 1600x400 with 1 Axes>,

<Axes: ylabel='Number of airline passengers'>)
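For intuition, NaiveForecaster with strategy="last" and sp=12 behaves like a seasonal naive forecast: each future month is predicted by the value observed in the same month of the last season in the training data. The following is a minimal plain-Python sketch of that idea, not aeon's implementation. The function name seasonal_naive is hypothetical, and the sketch assumes the forecast starts directly after the end of the training data.

```python
def seasonal_naive(y_train, sp, n_steps):
    """Repeat the last observed season of length sp for n_steps steps ahead."""
    last_season = list(y_train)[-sp:]
    # step h (0-based) reuses the value at the same position within the season
    return [last_season[h % sp] for h in range(n_steps)]


# toy series with a season length of 3
demo_pred = seasonal_naive([1, 2, 3, 4, 5, 6], sp=3, n_steps=5)
print(demo_pred)  # [4, 5, 6, 4, 5]
```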

Steps 3 and 4 - Specifying a forecasting metric, evaluating on the test set¶

The next step is to specify a forecasting metric. These are functions that return a number when given a prediction series and an actual series. They differ from sklearn metrics in that they can accept series with indices rather than np.ndarray. Forecasting metrics can be invoked using the functions in aeon.performance_metrics.forecasting, e.g., mean_absolute_percentage_error or mean_squared_error.

[43]:

from aeon.performance_metrics.forecasting import mean_absolute_percentage_error

[44]:

# option 1: using the lean function interface

mean_absolute_percentage_error(y_test, y_pred, symmetric=False)

# note: the FIRST argument is the ground truth, the SECOND argument is the forecasts

# the order matters for most metrics in general

[44]:

0.13189432350948402
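For reference, the non-symmetric mean absolute percentage error is the average of |actual - forecast| / |actual| over the test points. The plain-Python sketch below (the helper name mape is hypothetical, and this is not aeon's implementation) shows the formula and why the argument order matters: the ground truth appears in the denominator.

```python
def mape(y_true, y_pred):
    """Non-symmetric MAPE: mean of |true - pred| / |true|."""
    ratios = [abs(t - p) / abs(t) for t, p in zip(y_true, y_pred)]
    return sum(ratios) / len(ratios)


score = mape([100.0, 200.0], [110.0, 180.0])    # ground truth first
swapped = mape([110.0, 180.0], [100.0, 200.0])  # arguments swapped
print(score, swapped)  # the two values differ
```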

Step 5 - Testing performance against benchmarks¶

In general, forecast performances should be quantitatively tested against benchmark performances.
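Since this step is not yet supported in aeon, one option is a custom test. The following is a minimal sketch of a simplified Diebold-Mariano-style test, assuming squared-error loss and one-step-ahead forecasts. It omits the autocovariance correction that multi-step horizons require and relies on a normal approximation, so it is an illustration rather than a production-ready test. The function name diebold_mariano and the toy numbers are hypothetical.

```python
from statistics import NormalDist, mean


def diebold_mariano(y_true, pred_a, pred_b):
    """Simplified DM test: is the squared-error loss of A different from B?"""
    # loss differential per time point (negative values favour forecaster A)
    d = [(y - a) ** 2 - (y - b) ** 2 for y, a, b in zip(y_true, pred_a, pred_b)]
    n = len(d)
    d_bar = mean(d)
    var_d = sum((x - d_bar) ** 2 for x in d) / (n - 1)
    stat = d_bar / (var_d / n) ** 0.5
    # two-sided p-value under a standard normal approximation
    p_value = 2 * (1 - NormalDist().cdf(abs(stat)))
    return stat, p_value


y_obs = [10, 12, 11, 13, 12, 14, 13, 15]
err_a = [0.5, -0.5, 0.2, -0.3, 0.4, -0.1, 0.3, -0.2]  # candidate forecaster
err_b = [2.0, -1.5, 1.8, -2.2, 1.6, -1.9, 2.1, -1.7]  # baseline forecaster
pred_a = [y + e for y, e in zip(y_obs, err_a)]
pred_b = [y + e for y, e in zip(y_obs, err_b)]
dm_stat, dm_p = diebold_mariano(y_obs, pred_a, pred_b)
print(dm_stat, dm_p)
```

A negative statistic with a small p-value suggests that the candidate has lower squared error than the baseline on this sample.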

1.3.1 The basic batch forecast evaluation workflow in a nutshell - function metric interface¶

For convenience, we present the basic batch forecast evaluation workflow in one cell. This cell is using the lean function metric interface.

[45]:

from aeon.datasets import load_airline

from aeon.forecasting.base import ForecastingHorizon

from aeon.forecasting.model_selection import temporal_train_test_split

from aeon.forecasting.naive import NaiveForecaster

from aeon.performance_metrics.forecasting import mean_absolute_percentage_error

[46]:

# step 1: splitting historical data

y = load_airline()

y_train, y_test = temporal_train_test_split(y, test_size=36)

# step 2: running the basic forecasting workflow

fh = ForecastingHorizon(y_test.index, is_relative=False)

forecaster = NaiveForecaster(strategy="last", sp=12)

forecaster.fit(y_train)

y_pred = forecaster.predict(fh)

# step 3: specifying the evaluation metric and

# step 4: computing the forecast performance

mean_absolute_percentage_error(y_test, y_pred, symmetric=False)

# step 5: testing forecast performance against baseline

# under development

[46]:

0.13189432350948402

1.3.2 The basic batch forecast evaluation workflow in a nutshell - metric class interface¶

For convenience, we present the basic batch forecast evaluation workflow in one cell. This cell is using the advanced class specification interface for metrics.

[47]:

from aeon.datasets import load_airline

from aeon.forecasting.base import ForecastingHorizon

from aeon.forecasting.model_selection import temporal_train_test_split

from aeon.forecasting.naive import NaiveForecaster

from aeon.performance_metrics.forecasting import mean_absolute_percentage_error

[1]:

# step 1: splitting historical data

y = load_airline()

y_train, y_test = temporal_train_test_split(y, test_size=36)

# step 2: running the basic forecasting workflow

fh = ForecastingHorizon(y_test.index, is_relative=False)

forecaster = NaiveForecaster(strategy="last", sp=12)

forecaster.fit(y_train)

y_pred = forecaster.predict(fh)

# step 3: computing the forecast performance for a given metric

mean_absolute_percentage_error(y_test, y_pred, symmetric=False)

# step 5: testing forecast performance against baseline

# under development


1.4 Advanced deployment workflow: rolling updates & forecasts¶

A common use case requires the forecaster to regularly update with new data and make forecasts on a rolling basis. This is especially useful if the same kind of forecast has to be made at regular time points, e.g., daily or weekly. aeon forecasters support this type of deployment workflow via the update and update_predict methods.

1.4.1 Updating a forecaster with the update method¶

The update method can be called when a forecaster is already fitted, to ingest new data and make updated forecasts - this is referred to as an “update step”.

After the update, the forecaster’s internal “now” state (the cutoff) is set to the latest time stamp seen in the update batch (assumed to be later than previously seen data).

The general pattern is as follows:

1. Specify a forecasting strategy

2. Specify a relative forecasting horizon

3. Fit the forecaster to an initial batch of data using fit

4. Make forecasts for the relative forecasting horizon, using predict

5. Obtain new data; use update to ingest new data

6. Make forecasts using predict for the updated data

7. Repeat 5 and 6 as often as required
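Because the forecasting horizon in this pattern is relative, the same horizon maps to later absolute time points each time the cutoff moves. The sketch below illustrates that bookkeeping in plain Python, with months represented as (year, month) tuples. The function resolve_horizon is hypothetical and only mimics the idea; it is not part of aeon.

```python
def resolve_horizon(cutoff, fh):
    """Map relative steps fh (in months) to absolute (year, month) tuples."""
    year, month = cutoff
    resolved = []
    for h in fh:
        months_from_january = (month - 1) + h
        resolved.append((year + months_from_january // 12,
                         months_from_january % 12 + 1))
    return resolved


fh = range(1, 13)  # one year ahead, all months
after_fit = resolve_horizon((1957, 12), fh)    # cutoff after fitting
after_update = resolve_horizon((1958, 1), fh)  # cutoff after one update
print(after_fit[0], after_fit[-1])        # (1958, 1) (1958, 12)
print(after_update[0], after_update[-1])  # (1958, 2) (1959, 1)
```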

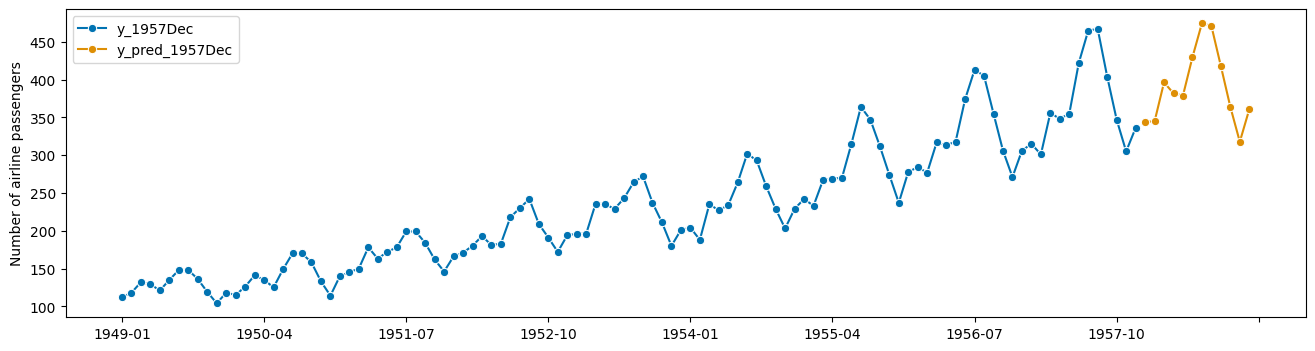

Example: suppose that, in the airline example, we want to make forecasts a year ahead, but every month, starting December 1957. The first few months, forecasts would be made as follows:

[49]:

from aeon.datasets import load_airline

from aeon.forecasting.ets import AutoETS

from aeon.visualisation import plot_series

[50]:

# we prepare the full data set for convenience

# note that in the scenario we will "know" only part of this at certain time points

y = load_airline()

[51]:

# December 1957

# this is the data known in December 1957

y_1957Dec = y[:-36]

# step 1: specifying the forecasting strategy

forecaster = AutoETS(auto=True, sp=12, n_jobs=-1)

# step 2: specifying the forecasting horizon: one year ahead, all months

fh = np.arange(1, 13)

# step 3: this is the first time we use the model, so we fit it

forecaster.fit(y_1957Dec)

# step 4: obtaining the first batch of forecasts for Jan 1958 - Dec 1958

y_pred_1957Dec = forecaster.predict(fh)

[52]:

# plotting predictions and past data

plot_series(y_1957Dec, y_pred_1957Dec, labels=["y_1957Dec", "y_pred_1957Dec"])

[52]:

(<Figure size 1600x400 with 1 Axes>,

<Axes: ylabel='Number of airline passengers'>)

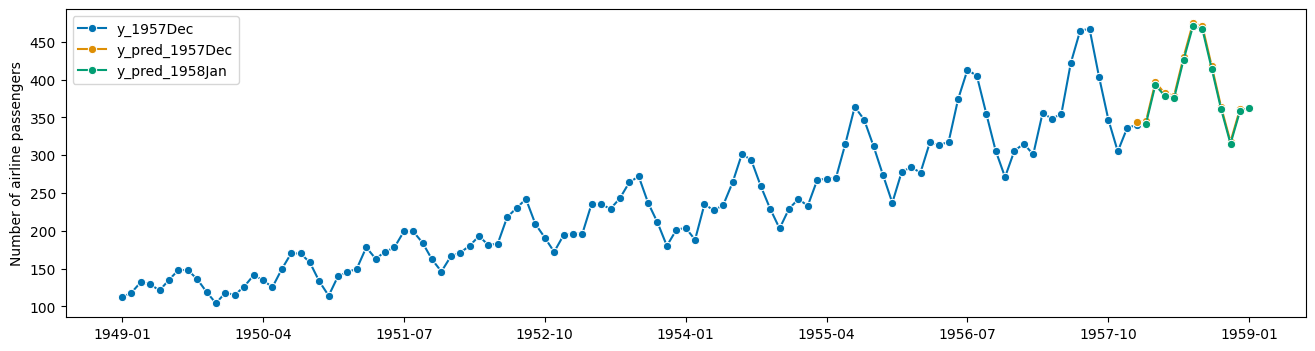

[53]:

# January 1958

# new data is observed:

y_1958Jan = y[[-36]]

# step 5: we update the forecaster with the new data

forecaster.update(y_1958Jan)

# step 6: making forecasts with the updated data

y_pred_1958Jan = forecaster.predict(fh)

[54]:

# note that the fh is relative, so forecasts are automatically for 1 month later

# i.e., from Feb 1958 to Jan 1959

y_pred_1958Jan

[54]:

1958-02 341.515812

1958-03 392.849891

1958-04 378.521584

1958-05 375.662009

1958-06 426.011081

1958-07 470.577777

1958-08 467.110706

1958-09 414.461454

1958-10 360.966279

1958-11 315.212373

1958-12 357.909015

1959-01 363.046383

Freq: M, dtype: float64

[55]:

# plotting predictions and past data

plot_series(

y[:-35],

y_pred_1957Dec,

y_pred_1958Jan,

labels=["y_1957Dec", "y_pred_1957Dec", "y_pred_1958Jan"],

)

[55]:

(<Figure size 1600x400 with 1 Axes>,

<Axes: ylabel='Number of airline passengers'>)

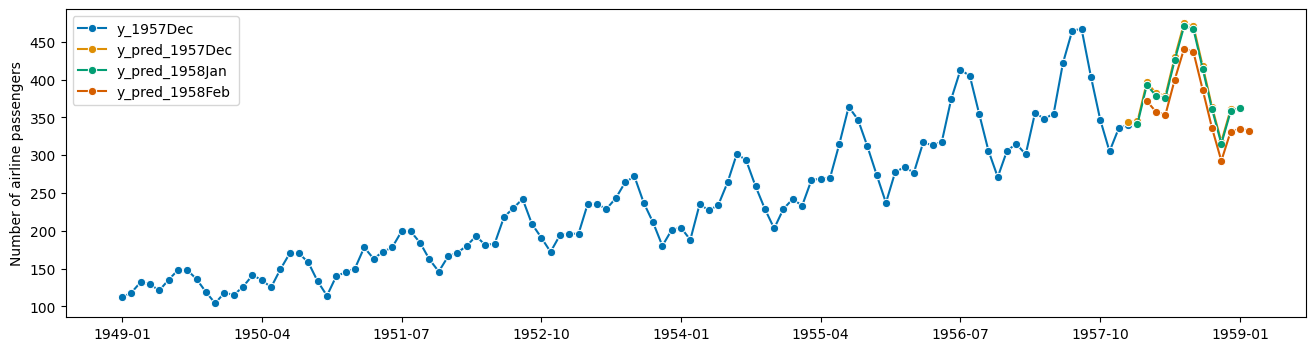

[56]:

# February 1958

# new data is observed:

y_1958Feb = y[[-35]]

# step 5: we update the forecaster with the new data

forecaster.update(y_1958Feb)

# step 6: making forecasts with the updated data

y_pred_1958Feb = forecaster.predict(fh)

[57]:

# plotting predictions and past data

plot_series(

y[:-35],

y_pred_1957Dec,

y_pred_1958Jan,

y_pred_1958Feb,

labels=["y_1957Dec", "y_pred_1957Dec", "y_pred_1958Jan", "y_pred_1958Feb"],

)

[57]:

(<Figure size 1600x400 with 1 Axes>,

<Axes: ylabel='Number of airline passengers'>)

… and so on.

A shorthand for running first update and then predict is update_predict_single - for some algorithms, this may be more efficient than the separate calls to update and predict:

[58]:

# March 1958

# new data is observed:

y_1958Mar = y[[-34]]

# step 5&6: update/predict in one step

forecaster.update_predict_single(y_1958Mar, fh=fh)

[58]:

1958-04 349.166352

1958-05 346.925002

1958-06 394.064152

1958-07 435.855204

1958-08 433.331082

1958-09 384.856436

1958-10 335.547256

1958-11 293.184412

1958-12 333.288639

1959-01 338.608873

1959-02 336.996809

1959-03 388.137360

Freq: M, dtype: float64

1.4.2 Moving the “now” state without updating the model¶

In the rolling deployment mode, it may be useful to move the estimator’s “now” state (the cutoff) to later, for example if no new data was observed, but time has progressed; or, if computations take too long, and forecasts have to be queried.

The update interface provides an option for this, via the update_params argument of update and other update functions.

If update_params is set to False, no model update computations are performed; only data is stored, and the internal “now” state (the cutoff) is set to the most recent date.
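The distinction can be illustrated with a toy forecaster. The class MeanForecaster below is hypothetical and only mimics the behaviour described here using integer time indices; it is a sketch, not aeon code.

```python
class MeanForecaster:
    """Toy forecaster that always predicts the mean of the data it was fitted on."""

    def fit(self, y):
        self._y = list(y)
        self.cutoff = len(self._y) - 1  # index of the latest observation
        self.mean_ = sum(self._y) / len(self._y)
        return self

    def update(self, y_new, update_params=True):
        # data is always stored and the cutoff always moves forward
        self._y.extend(y_new)
        self.cutoff = len(self._y) - 1
        # the fitted parameter is only recomputed if requested
        if update_params:
            self.mean_ = sum(self._y) / len(self._y)
        return self

    def predict(self, fh):
        # relative horizon: predictions are indexed from the current cutoff
        return {self.cutoff + h: self.mean_ for h in fh}


toy = MeanForecaster().fit([1.0, 2.0, 3.0])
toy.update([7.0], update_params=False)
frozen = toy.predict([1, 2])  # cutoff moved, mean unchanged
toy.update([7.0])
refreshed = toy.predict([1])  # mean recomputed on all stored data
print(frozen, refreshed)
```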

[59]:

# April 1958

# new data is observed:

y_1958Apr = y[[-33]]

# step 5: perform an update without re-computing the model parameters

forecaster.update(y_1958Apr, update_params=False)

[59]:

AutoETS(auto=True, n_jobs=-1, sp=12)

1.4.3 Walk-forward predictions on a batch of data¶

aeon can also simulate the update/predict deployment mode with a full batch of data.

This is not useful in deployment, as it requires all data to be available in advance; however, it is useful in playback, such as for simulations or model evaluation.

The update/predict playback mode can be called using update_predict and a re-sampling constructor which encodes the precise walk-forward scheme.

[60]:

# from aeon.datasets import load_airline

# from aeon.forecasting.ets import AutoETS

# from aeon.forecasting.model_selection import ExpandingWindowSplitter

# from aeon.visualisation import plot_series

NOTE: commented out - this part of the interface is currently undergoing a re-work. Contributions and PRs are appreciated.

[61]:

# for playback, the full data needs to be loaded in advance

# y = load_airline()

[62]:

# step 1: specifying the forecasting strategy

# forecaster = AutoETS(auto=True, sp=12, n_jobs=-1)

# step 2: specifying the forecasting horizon

# fh = np.arange(1, 13)

# step 3: specifying the cross-validation scheme

# cv = ExpandingWindowSplitter()

# step 4: fitting the forecaster - fh should be passed here

# forecaster.fit(y[:-36], fh=fh)

# step 5: rollback

# y_preds = forecaster.update_predict(y, cv)

1.5 Advanced evaluation workflow: rolling re-sampling and aggregate errors, rolling back-testing¶

To evaluate forecasters with respect to their performance in rolling forecasting, the forecaster needs to be tested in a set-up mimicking rolling forecasting, usually on past data. Note that the batch back-testing as in Section 1.3 would not be an appropriate evaluation set-up for rolling deployment, as that tests only a single forecast batch.

The advanced evaluation workflow can be carried out using the evaluate benchmarking function. evaluate takes as arguments:

- a forecaster to be evaluated

- a scikit-learn-style re-sampling strategy for temporal splitting (cv below), e.g., ExpandingWindowSplitter or SlidingWindowSplitter

- a strategy (string): whether the forecaster should always be refitted, or just fitted once and then updated

[63]:

from aeon.forecasting.model_evaluation import evaluate

from aeon.forecasting.model_selection import ExpandingWindowSplitter

from aeon.forecasting.theta import ThetaForecaster

[64]:

forecaster = ThetaForecaster(sp=12)

cv = ExpandingWindowSplitter(

step_length=12, fh=[1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12], initial_window=72

)

df = evaluate(forecaster=forecaster, y=y, cv=cv, strategy="refit", return_data=True)

df.iloc[:, :5]

[64]:

| | test_mean_absolute_percentage_error | fit_time | pred_time | len_train_window | cutoff |
|---|---|---|---|---|---|
| 0 | 0.083537 | 0.014647 | 0.005874 | 72 | 1954-12 |
| 1 | 0.047574 | 0.008137 | 0.005779 | 84 | 1955-12 |
| 2 | 0.059412 | 0.008143 | 0.005170 | 96 | 1956-12 |
| 3 | 0.040142 | 0.007612 | 0.005146 | 108 | 1957-12 |
| 4 | 0.101327 | 0.007556 | 0.005259 | 120 | 1958-12 |
| 5 | 0.051581 | 0.007802 | 0.005211 | 132 | 1959-12 |
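The len_train_window values above follow from the expanding window scheme: the training window starts with initial_window points and grows by step_length at each fold, as long as the whole horizon still fits inside the series. The plain-Python sketch below reproduces that bookkeeping with integer positions. The function expanding_window_splits is hypothetical and simplified; it is not the aeon splitter.

```python
def expanding_window_splits(n, initial_window, step_length, fh):
    """Return (train_positions, test_positions) pairs for an expanding window."""
    splits = []
    train_end = initial_window
    # stop once the furthest horizon step would fall outside the series
    while train_end + max(fh) <= n:
        train = list(range(train_end))
        test = [train_end + h - 1 for h in fh]
        splits.append((train, test))
        train_end += step_length
    return splits


# the airline series has 144 monthly observations
folds = expanding_window_splits(144, initial_window=72, step_length=12,
                                fh=list(range(1, 13)))
train_lengths = [len(train) for train, _ in folds]
print(train_lengths)  # [72, 84, 96, 108, 120, 132]
```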

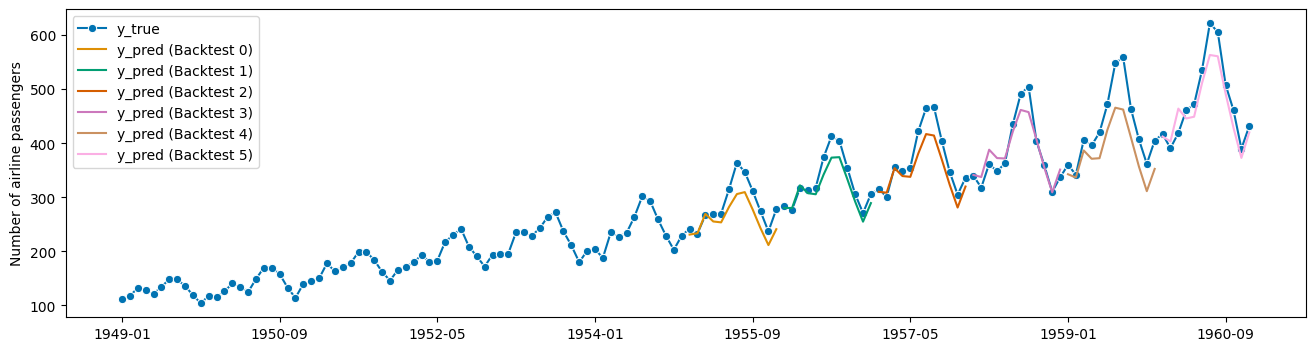

[65]:

# visualization of a forecaster evaluation

fig, ax = plot_series(

y,

df["y_pred"].iloc[0],

df["y_pred"].iloc[1],

df["y_pred"].iloc[2],

df["y_pred"].iloc[3],

df["y_pred"].iloc[4],

df["y_pred"].iloc[5],

markers=["o", "", "", "", "", "", ""],

labels=["y_true"] + ["y_pred (Backtest " + str(x) + ")" for x in range(6)],

)

ax.legend()

[65]:

<matplotlib.legend.Legend at 0x29e2d1f9550>

Todo: performance metrics, averages, and testing - contributions to aeon and the tutorial are welcome.

3. Advanced composition patterns - pipelines, reduction, autoML, and more¶

aeon supports a number of advanced composition patterns to create forecasters out of simpler components:

Reduction - building a forecaster from estimators of “simpler” scientific types, like scikit-learn regressors. A common example is feature/label tabulation by rolling window, aka the “direct reduction strategy”.

Tuning - determining values for hyper-parameters of a forecaster in a data-driven manner. A common example is grid search on temporally rolling re-sampling of train/test splits.

Pipelining - concatenating transformers with a forecaster to obtain one forecaster. A common example is detrending and deseasonalizing, then forecasting; an instance of this is the common “STL forecaster”.

AutoML, also known as automated model selection - using automated tuning strategies to select not only hyper-parameters but entire forecasting strategies. A common example is on-line multiplexer tuning.

For illustration, all estimators below will be presented on the basic forecasting workflow - though they also support the advanced forecasting and evaluation workflows under the unified aeon interface (see Section 1).

For use in the other workflows, simply replace the “forecaster specification block” (“forecaster=”) by the forecaster specification block in the examples presented below.

[91]:

# imports necessary for this chapter

import numpy as np

from aeon.datasets import load_airline

from aeon.forecasting.base import ForecastingHorizon

from aeon.forecasting.model_selection import temporal_train_test_split

from aeon.performance_metrics.forecasting import mean_absolute_percentage_error

from aeon.visualisation import plot_series

# data loading for illustration (see section 1 for explanation)

y = load_airline()

y_train, y_test = temporal_train_test_split(y, test_size=36)

fh = ForecastingHorizon(y_test.index, is_relative=False)

3.1 Reduction: from forecasting to regression¶

aeon provides a meta-estimator that allows the use of any scikit-learn estimator for forecasting. It is:

- modular and compatible with scikit-learn, so that we can easily apply any scikit-learn regressor to solve our forecasting problem,

- parametric and tuneable, allowing us to tune hyper-parameters such as the window length or the strategy used to generate forecasts,

- adaptive, in the sense that it adapts the scikit-learn estimator interface to that of a forecaster, making sure that we can tune and properly evaluate our model.

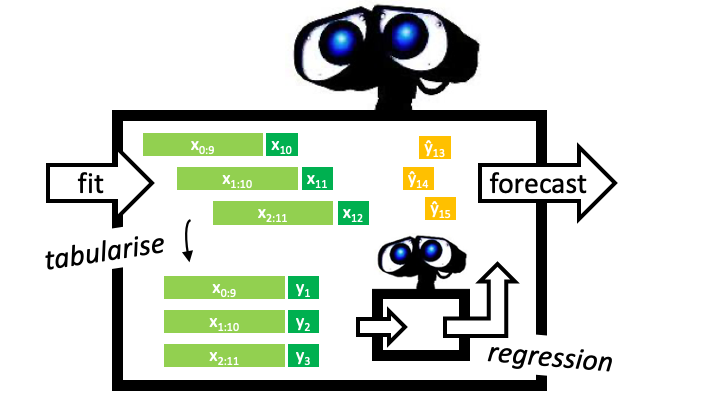

Example: we will define a tabulation reduction strategy to convert a k-nearest neighbors regressor (sklearn KNeighborsRegressor) into a forecaster. The composite algorithm is an object compliant with the aeon forecaster interface (picture: big robot), and contains the regressor as a parameter accessible component (picture: little robot). In fit, the composite algorithm uses a sliding window strategy to tabulate the data, and fit the regressor to the tabulated data (picture: left half). In predict, the composite algorithm presents the regressor with the last observed window to obtain predictions (picture: right half).

Below, the composite is constructed using the shorthand function make_reduction, which produces an aeon estimator of forecaster scitype. It is called with a constructed scikit-learn regressor, regressor, and additional parameters which can later be tuned as hyper-parameters.
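To make the tabulation idea concrete, the following plain-Python sketch tabulates a series with a sliding window, uses a hand-written 1-nearest-neighbour lookup as the regressor, and forecasts recursively by feeding each prediction back into the window. All function names are hypothetical. It illustrates the recursive strategy under simplifying assumptions and is not the code behind make_reduction.

```python
def tabulate(series, window_length):
    """Sliding window tabulation: each row of X holds window_length lags, y the next value."""
    n_rows = len(series) - window_length
    X = [series[i:i + window_length] for i in range(n_rows)]
    y = [series[i + window_length] for i in range(n_rows)]
    return X, y


def predict_1nn(X, y, window):
    """Return the target of the training row closest to window (squared Euclidean)."""
    dists = [sum((a - b) ** 2 for a, b in zip(row, window)) for row in X]
    return y[dists.index(min(dists))]


def recursive_forecast(series, window_length, n_steps):
    """Forecast n_steps ahead, appending each prediction to the history."""
    X, y = tabulate(series, window_length)
    history = list(series)
    preds = []
    for _ in range(n_steps):
        next_value = predict_1nn(X, y, history[-window_length:])
        preds.append(next_value)
        history.append(next_value)
    return preds


toy_series = [1, 2, 3, 1, 2, 3, 1, 2, 3]
toy_preds = recursive_forecast(toy_series, window_length=3, n_steps=3)
print(toy_preds)  # [1, 2, 3]
```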

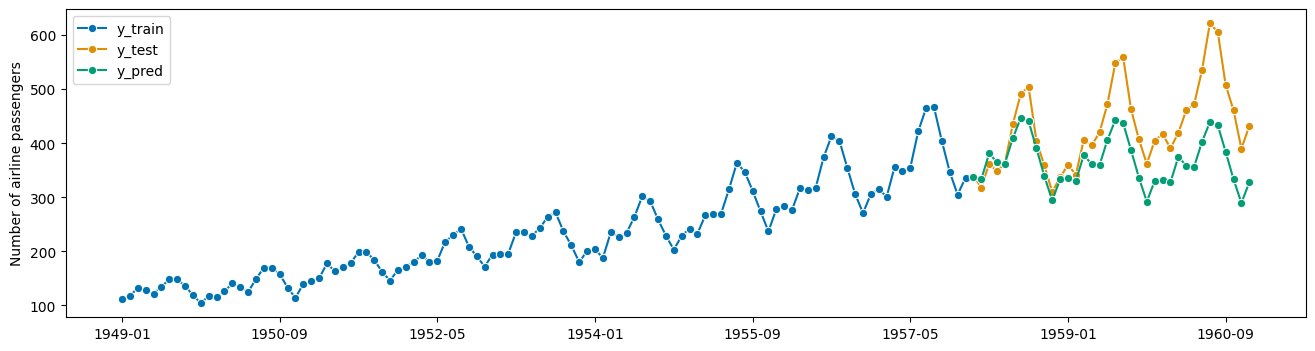

[139]:

from sklearn.neighbors import KNeighborsRegressor

from aeon.forecasting.compose import make_reduction

[140]:

regressor = KNeighborsRegressor(n_neighbors=1)

forecaster = make_reduction(regressor, window_length=15, strategy="recursive")

[141]:

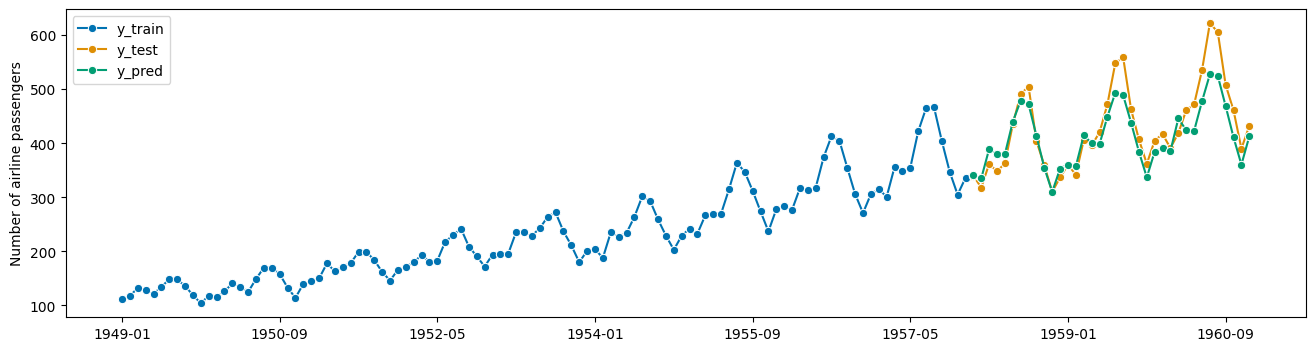

forecaster.fit(y_train)

y_pred = forecaster.predict(fh)

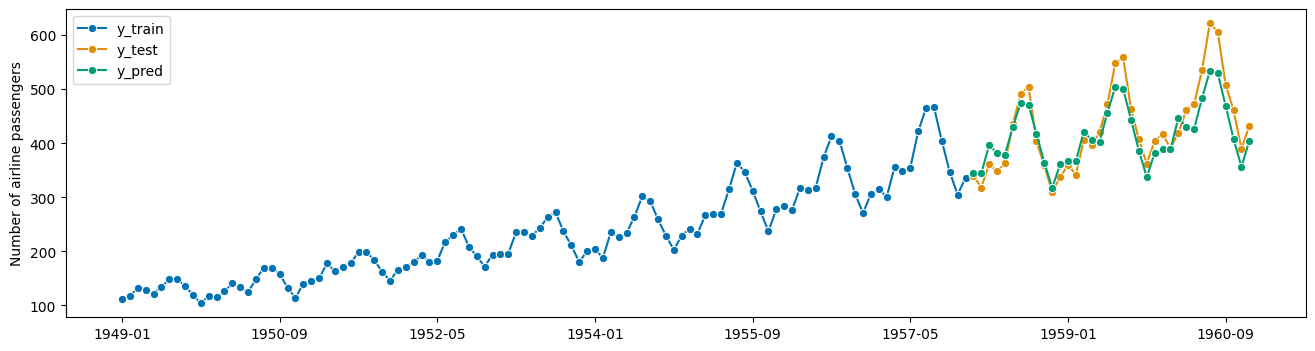

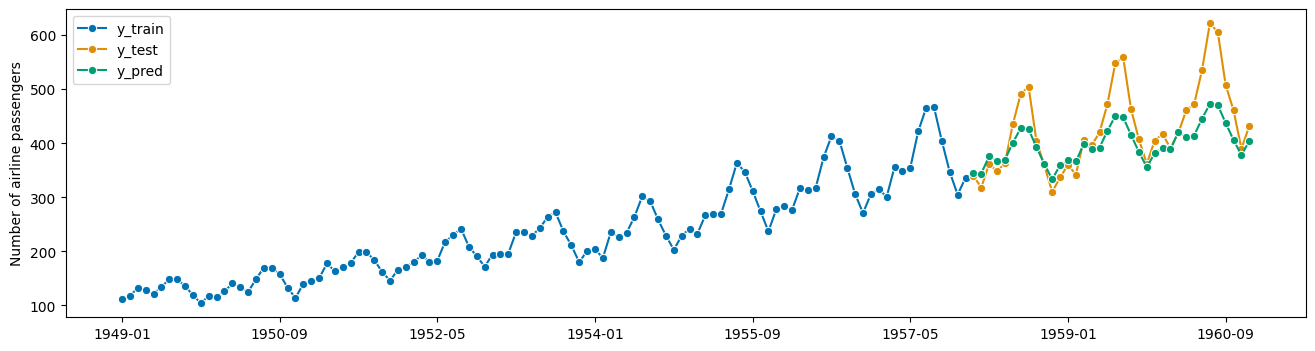

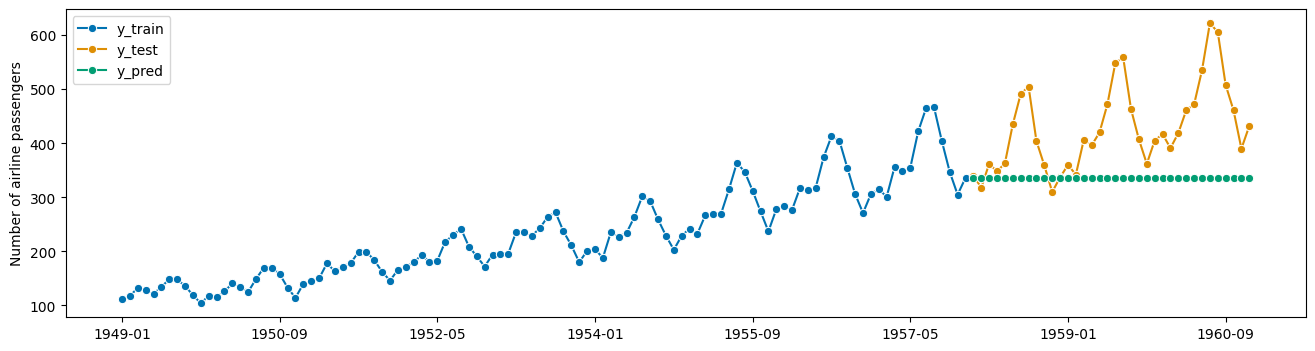

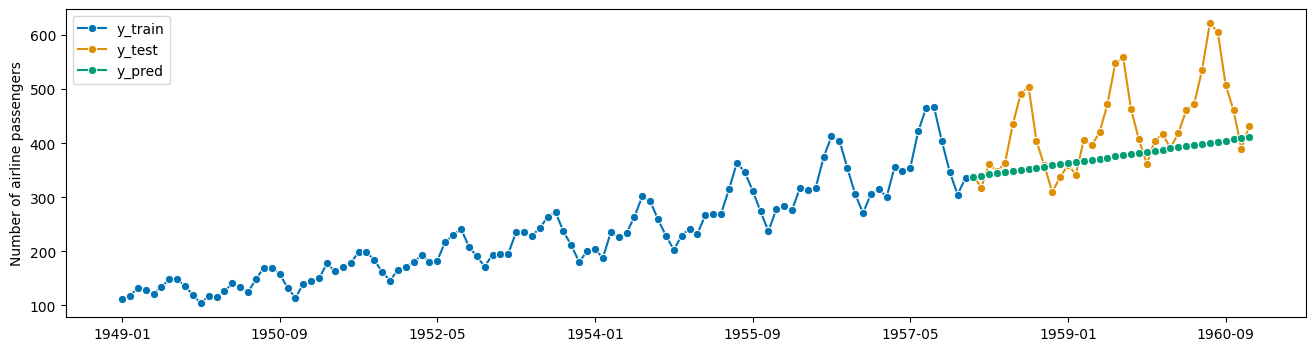

plot_series(y_train, y_test, y_pred, labels=["y_train", "y_test", "y_pred"])

mean_absolute_percentage_error(y_test, y_pred, symmetric=False)

[141]:

0.12887507224382988

In the above example we use the “recursive” reduction strategy. Other implemented strategies are “direct”, “dirrec”, and “multioutput”.

Parameters can be inspected using scikit-learn compatible get_params functionality (and set using set_params). This provides tunable and nested access to parameters of the KNeighborsRegressor (as estimator__[parametername]), and the window_length of the reduction strategy. Note that the strategy is not accessible, as underneath the utility function this is mapped to separate algorithm classes. For tuning over algorithms, see the “autoML” section below.

[95]:

forecaster.get_params()

[95]:

{'estimator__algorithm': 'auto',

'estimator__leaf_size': 30,

'estimator__metric': 'minkowski',

'estimator__metric_params': None,

'estimator__n_jobs': None,

'estimator__n_neighbors': 1,

'estimator__p': 2,

'estimator__weights': 'uniform',

'estimator': KNeighborsRegressor(n_neighbors=1),

'pooling': 'local',

'transformers': None,

'window_length': 15}

3.2 Pipelining, detrending and deseasonalization¶

A common composition motif is pipelining: for example, first deseasonalizing or detrending the data, then forecasting the detrended/deseasonalized series. When forecasting, one needs to add the trend and seasonal component back to the data.

3.2.1 The basic forecasting pipeline¶

aeon provides a generic pipeline object for this kind of composite modelling, the TransformedTargetForecaster. It chains an arbitrary number of transformations with a forecaster. The transformations can be either pre-processing or post-processing transformations. An example of a forecaster with pre-processing transformations can be seen below.

[96]:

from aeon.forecasting.arima import ARIMA

from aeon.forecasting.compose import TransformedTargetForecaster

from aeon.transformations.detrend import Deseasonalizer

[97]:

forecaster = TransformedTargetForecaster(

[

("deseasonalize", Deseasonalizer(model="multiplicative", sp=12)),

("forecast", ARIMA()),

]

)

forecaster.fit(y_train)

y_pred = forecaster.predict(fh)

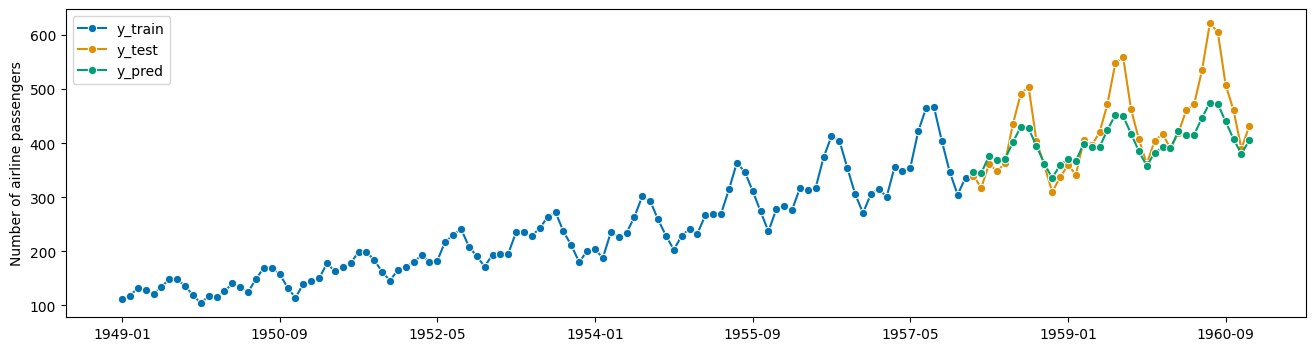

plot_series(y_train, y_test, y_pred, labels=["y_train", "y_test", "y_pred"])

mean_absolute_percentage_error(y_test, y_pred, symmetric=False)

[97]:

0.1396997352629755

In the above example, the TransformedTargetForecaster is constructed with a list of steps, each a pair of name and estimator, where the last estimator is of forecaster scitype. The pre-processing transformers should be series-to-series transformers which possess both a transform and an inverse_transform method. The resulting estimator is of forecaster scitype and has all interface defining methods. In fit, all transformers apply fit_transform to the data, then the forecaster’s fit is applied; in predict, first the forecaster’s predict is applied, then the transformers’ inverse_transform in reverse order.
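The fit/predict order described above can be sketched in plain Python for a multiplicative deseasonalizer followed by a last-value forecaster. The seasonal indices here are simple per-position means divided by the overall mean, which is a simplification chosen for illustration and an assumption of this sketch, not necessarily how Deseasonalizer estimates them. All function names are hypothetical.

```python
def seasonal_indices(series, sp):
    """Multiplicative seasonal index per position in the season (simplified)."""
    overall_mean = sum(series) / len(series)
    indices = []
    for position in range(sp):
        values = series[position::sp]
        indices.append((sum(values) / len(values)) / overall_mean)
    return indices


def pipeline_forecast(series, sp, n_steps):
    """Transform, forecast on the transformed series, then inverse-transform."""
    idx = seasonal_indices(series, sp)
    # fit: the transformer is applied first (divide out the seasonal component)
    deseasonalized = [v / idx[t % sp] for t, v in enumerate(series)]
    # the forecaster is fitted on the transformed series (here: last value)
    level = deseasonalized[-1]
    # predict: forecast first, then inverse transform (multiply the season back)
    n = len(series)
    return [level * idx[(n + h) % sp] for h in range(n_steps)]


season_preds = pipeline_forecast([10.0, 20.0, 10.0, 20.0], sp=2, n_steps=3)
print(season_preds)  # approximately [10.0, 20.0, 10.0]
```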

The same pipeline, as above, can also be constructed with the multiplication dunder method *.

This creates a TransformedTargetForecaster as above, with components given default names.

[98]:

forecaster = Deseasonalizer(model="multiplicative", sp=12) * ARIMA()

forecaster

[98]:

TransformedTargetForecaster(steps=[Deseasonalizer(model='multiplicative', sp=12), ARIMA()])

The names in a dunder constructed pipeline are made unique in case, e.g., two deseasonalizers are used.

Example of a multiple seasonality model:

[99]:

forecaster = (

Deseasonalizer(model="multiplicative", sp=12)

* Deseasonalizer(model="multiplicative", sp=3)

* ARIMA()

)

forecaster.get_params()

[99]:

{'steps': [Deseasonalizer(model='multiplicative', sp=12),

Deseasonalizer(model='multiplicative', sp=3),

ARIMA()],

'Deseasonalizer_1': Deseasonalizer(model='multiplicative', sp=12),

'Deseasonalizer_2': Deseasonalizer(model='multiplicative', sp=3),

'ARIMA': ARIMA(),

'Deseasonalizer_1__model': 'multiplicative',

'Deseasonalizer_1__sp': 12,

'Deseasonalizer_2__model': 'multiplicative',

'Deseasonalizer_2__sp': 3,

'ARIMA__concentrate_scale': False,

'ARIMA__enforce_invertibility': True,

'ARIMA__enforce_stationarity': True,

'ARIMA__hamilton_representation': False,

'ARIMA__maxiter': 50,

'ARIMA__measurement_error': False,

'ARIMA__method': 'lbfgs',

'ARIMA__mle_regression': True,

'ARIMA__order': (1, 0, 0),

'ARIMA__out_of_sample_size': 0,

'ARIMA__scoring': 'mse',

'ARIMA__scoring_args': None,

'ARIMA__seasonal_order': (0, 0, 0, 0),

'ARIMA__simple_differencing': False,

'ARIMA__start_params': None,

'ARIMA__suppress_warnings': False,

'ARIMA__time_varying_regression': False,

'ARIMA__trend': None,

'ARIMA__with_intercept': True}

We can also create a pipeline with post-processing transformations; these are transformations after the forecaster, in a dunder pipeline or a TransformedTargetForecaster.

Below is an example of a forecaster with integer rounding post-processing of the predictions:

[100]:

from aeon.transformations.func_transform import FunctionTransformer

forecaster = ARIMA() * FunctionTransformer(lambda y: y.round())

forecaster.fit_predict(y, fh=fh).head(3)

[100]:

1958-01 334.0

1958-02 338.0

1958-03 317.0

Freq: M, dtype: float64

Both pre- and post-processing transformers can be present. In this case, the post-processing transformations will be applied after the inverse-transform of the pre-processing ones.

[101]:

forecaster = (

Deseasonalizer(model="multiplicative", sp=12)

* Deseasonalizer(model="multiplicative", sp=3)

* ARIMA()

* FunctionTransformer(lambda y: y.round())

)

forecaster.fit_predict(y_train, fh=fh).head(3)

[101]:

Period

1958-01 339.0

1958-02 334.0

1958-03 381.0

Freq: M, dtype: float64

3.2.2 The Detrender as pipeline component¶

For detrending, we can use the Detrender. This is an estimator of series-to-series transformer scitype that wraps an arbitrary forecaster. For example, for linear detrending, we can use PolynomialTrendForecaster to fit a linear trend, and then subtract/add it using the Detrender transformer inside TransformedTargetForecaster.

To understand better what happens, we first examine the detrender separately:

[102]:

from aeon.forecasting.trend import PolynomialTrendForecaster

from aeon.transformations.detrend import Detrender

[103]:

# linear detrending

forecaster = PolynomialTrendForecaster(degree=1)

transformer = Detrender(forecaster=forecaster)

yt = transformer.fit_transform(y_train)

# internally, the Detrender uses the in-sample predictions

# of the PolynomialTrendForecaster

forecaster = PolynomialTrendForecaster(degree=1)

fh_ins = -np.arange(len(y_train)) # in-sample forecasting horizon

y_pred = forecaster.fit(y_train).predict(fh=fh_ins)

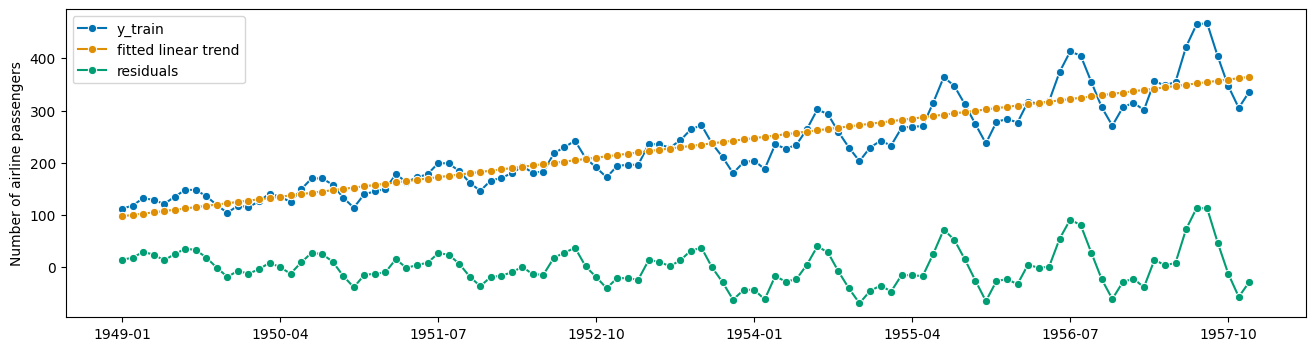

plot_series(y_train, y_pred, yt, labels=["y_train", "fitted linear trend", "residuals"]);
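The arithmetic behind additive linear detrending can be sketched without any library: fit a least-squares line against the time index, subtract it to obtain residuals, and add it back to invert the transformation. The helper names fit_line and detrend are hypothetical, and the sketch assumes equally spaced integer time points.

```python
def fit_line(y):
    """Ordinary least squares fit of y against the time index 0..n-1."""
    n = len(y)
    t_mean = (n - 1) / 2
    y_mean = sum(y) / n
    numerator = sum((t - t_mean) * (v - y_mean) for t, v in enumerate(y))
    denominator = sum((t - t_mean) ** 2 for t in range(n))
    slope = numerator / denominator
    intercept = y_mean - slope * t_mean
    return intercept, slope


def detrend(y):
    """Return (residuals, in-sample trend) for additive linear detrending."""
    intercept, slope = fit_line(y)
    trend = [intercept + slope * t for t in range(len(y))]
    residuals = [v - tr for v, tr in zip(y, trend)]
    return residuals, trend


line_y = [1.0, 3.0, 5.0, 7.0, 9.0]
line_resid, line_trend = detrend(line_y)
# inverse transform: adding the trend back recovers the original series
restored = [r + tr for r, tr in zip(line_resid, line_trend)]
print(fit_line(line_y), line_resid)
```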

Since the Detrender is of scitype series-to-series-transformer, it can be used in the TransformedTargetForecaster for detrending any forecaster:

[104]:

forecaster = TransformedTargetForecaster(

[

("deseasonalize", Deseasonalizer(model="multiplicative", sp=12)),

("detrend", Detrender(forecaster=PolynomialTrendForecaster(degree=1))),

("forecast", ARIMA()),

]

)

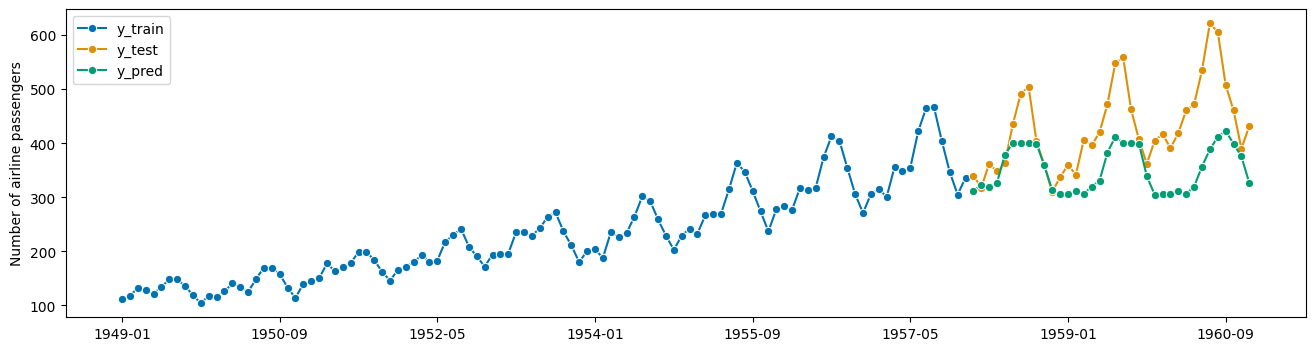

forecaster.fit(y_train)

y_pred = forecaster.predict(fh)

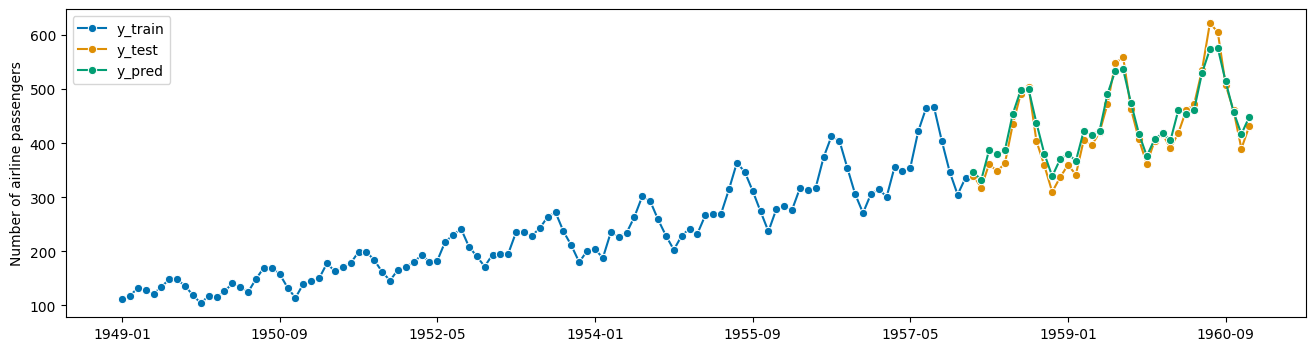

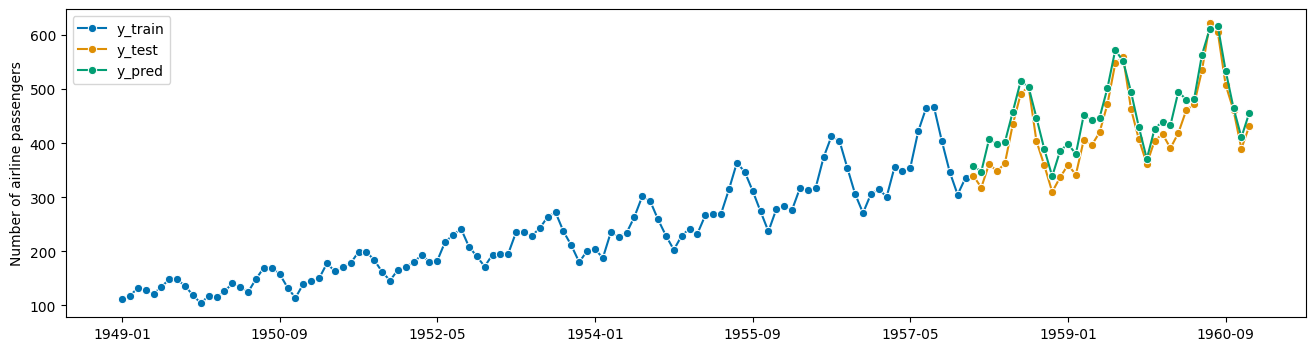

plot_series(y_train, y_test, y_pred, labels=["y_train", "y_test", "y_pred"])

mean_absolute_percentage_error(y_test, y_pred, symmetric=False)

[104]:

0.0561016822006403

3.2.3 Complex pipeline composites and parameter inspection¶

aeon follows the scikit-learn philosophy of composability and nested parameter inspection. As long as an estimator has the right scitype, it can be used as part of any composition principle requiring that scitype. Above, we have already seen the example of a forecaster inside a Detrender, which is an estimator of scitype series-to-series-transformer, with one component of forecaster scitype. Similarly, in a TransformedTargetForecaster, we can use the reduction composite from Section 3.1 as the last forecaster element in the pipeline, which inside has an estimator of tabular regressor scitype, the KNeighborsRegressor:

[105]:

from sklearn.neighbors import KNeighborsRegressor

from aeon.forecasting.compose import make_reduction

[106]:

forecaster = TransformedTargetForecaster(

[

("deseasonalize", Deseasonalizer(model="multiplicative", sp=12)),

("detrend", Detrender(forecaster=PolynomialTrendForecaster(degree=1))),

(

"forecast",

make_reduction(

KNeighborsRegressor(),

window_length=15,

strategy="recursive",

),

),

]

)

forecaster.fit(y_train)

y_pred = forecaster.predict(fh)

plot_series(y_train, y_test, y_pred, labels=["y_train", "y_test", "y_pred"])

mean_absolute_percentage_error(y_test, y_pred, symmetric=False)

[106]:

0.05870838788931646

As with scikit-learn models, we can inspect and access parameters of any component via get_params and set_params:

[107]:

forecaster.get_params()

[107]:

{'steps': [('deseasonalize', Deseasonalizer(model='multiplicative', sp=12)),

('detrend', Detrender(forecaster=PolynomialTrendForecaster())),

('forecast',

RecursiveTabularRegressionForecaster(estimator=KNeighborsRegressor(),

window_length=15))],

'deseasonalize': Deseasonalizer(model='multiplicative', sp=12),

'detrend': Detrender(forecaster=PolynomialTrendForecaster()),

'forecast': RecursiveTabularRegressionForecaster(estimator=KNeighborsRegressor(),

window_length=15),

'deseasonalize__model': 'multiplicative',

'deseasonalize__sp': 12,

'detrend__forecaster__degree': 1,

'detrend__forecaster__regressor': None,

'detrend__forecaster__with_intercept': True,

'detrend__forecaster': PolynomialTrendForecaster(),

'detrend__model': 'additive',

'forecast__estimator__algorithm': 'auto',

'forecast__estimator__leaf_size': 30,

'forecast__estimator__metric': 'minkowski',

'forecast__estimator__metric_params': None,

'forecast__estimator__n_jobs': None,

'forecast__estimator__n_neighbors': 5,

'forecast__estimator__p': 2,

'forecast__estimator__weights': 'uniform',

'forecast__estimator': KNeighborsRegressor(),

'forecast__pooling': 'local',

'forecast__transformers': None,

'forecast__window_length': 15}

3.3 Parameter tuning¶

aeon provides parameter tuning strategies as compositors of forecaster scitype, similar to scikit-learn’s GridSearchCV.

3.3.1 Basic tuning using ForecastingGridSearchCV¶

The compositor ForecastingGridSearchCV (like other tuners) is constructed with a forecaster to tune, a cross-validation constructor, a scikit-learn parameter grid, and parameters specific to the tuning strategy. Cross-validation constructors follow the scikit-learn interface for re-samplers, and can be slotted in exchangeably.

As an example, we show tuning of the window length in the reduction compositor from Section 3.1, using temporal sliding window tuning:

[108]:

from sklearn.neighbors import KNeighborsRegressor

from aeon.forecasting.compose import make_reduction

from aeon.forecasting.model_selection import (

ForecastingGridSearchCV,

SlidingWindowSplitter,

)

[142]:

regressor = KNeighborsRegressor()

forecaster = make_reduction(regressor, window_length=15, strategy="recursive")

param_grid = {"window_length": [7, 12, 15]}

# We fit the forecaster on an initial window which is 80% of the historical data

# then use temporal sliding window cross-validation to find the optimal hyper-parameters

cv = SlidingWindowSplitter(initial_window=int(len(y_train) * 0.8), window_length=20)

gscv = ForecastingGridSearchCV(

forecaster, strategy="refit", cv=cv, param_grid=param_grid

)

As with other composites, the resulting forecaster provides the unified interface of aeon forecasters - window splitting, tuning, etc. require no manual effort and are done behind the unified interface:
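For intuition, the work hidden behind the interface is roughly a loop over candidate parameter values and temporal splits, followed by picking the value with the lowest average error. The plain-Python sketch below shows this for a toy forecaster that predicts the mean of the last k training points. The helper names, the simplified sliding window splitter (no separate initial window), and the one-step-ahead horizon are assumptions of this illustration, not aeon's implementation.

```python
def sliding_splits(n, window_length, fh=1):
    """Yield (train_positions, test_position) pairs for a sliding window."""
    for end in range(window_length, n - fh + 1):
        yield range(end - window_length, end), end + fh - 1


def mean_of_last_k(train, k):
    """Toy forecaster: predict the mean of the last k training values."""
    return sum(train[-k:]) / k


def grid_search(series, grid, cv_window):
    """Return the best k and the mean absolute percentage error per candidate."""
    scores = {}
    for k in grid:
        errors = []
        for train_pos, test_pos in sliding_splits(len(series), cv_window):
            train = [series[i] for i in train_pos]
            pred = mean_of_last_k(train, k)
            actual = series[test_pos]
            errors.append(abs(actual - pred) / abs(actual))
        scores[k] = sum(errors) / len(errors)
    best_k = min(scores, key=scores.get)
    return best_k, scores


trend_series = [float(v) for v in range(1, 21)]  # steadily increasing toy data
best_k, k_scores = grid_search(trend_series, grid=[1, 3, 5], cv_window=5)
print(best_k, k_scores)
```

On steadily increasing data, averaging over more past values lags further behind the trend, so the shortest window wins in this toy example.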

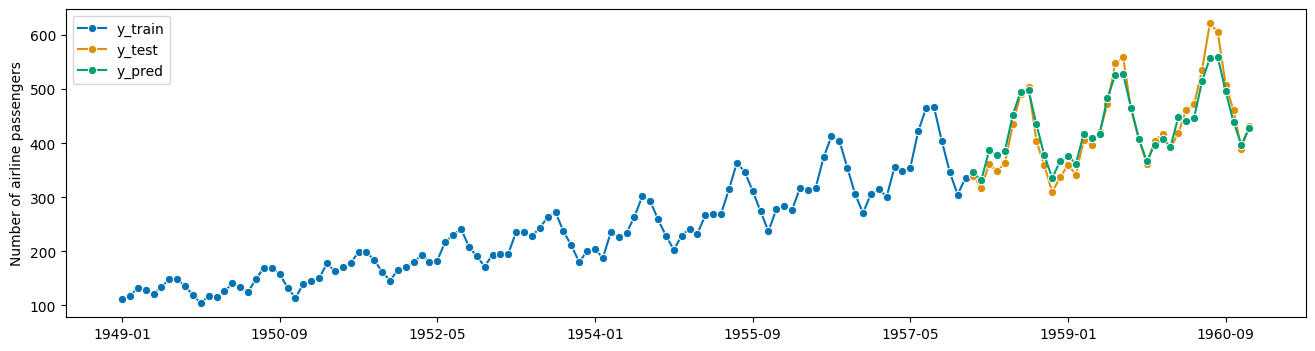

[143]:

gscv.fit(y_train)

y_pred = gscv.predict(fh)

plot_series(y_train, y_test, y_pred, labels=["y_train", "y_test", "y_pred"])

mean_absolute_percentage_error(y_test, y_pred, symmetric=False)

[143]:

0.16607972017556033

Tuned parameters can be accessed in the best_params_ attribute:

[111]:

gscv.best_params_

[111]:

{'window_length': 7}

An instance of the best forecaster, with hyper-parameters set, can be retrieved by accessing the best_forecaster_ attribute:

[112]:

gscv.best_forecaster_

[112]:

RecursiveTabularRegressionForecaster(estimator=KNeighborsRegressor(), window_length=7)

3.3.2 Tuning of complex composites¶

As in scikit-learn, parameters of nested components can be tuned by accessing their get_params key - by default this is [estimatorname]__[parametername] if [estimatorname] is the name of the component, and [parametername] the name of a parameter within the estimator [estimatorname].

For example, below we tune the KNeighborsRegressor component’s n_neighbors, in addition to tuning window_length. The tuneable parameters can easily be queried using forecaster.get_params().
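A grid with several keys is expanded into all combinations of the listed values, and each combination is one candidate configuration. The sketch below enumerates the combinations with itertools.product for a grid over window_length and estimator__n_neighbors. The helper expand_grid is hypothetical and only illustrates the principle.

```python
from itertools import product


def expand_grid(param_grid):
    """Return one dict per combination of parameter values."""
    keys = sorted(param_grid)
    value_lists = [param_grid[key] for key in keys]
    return [dict(zip(keys, combo)) for combo in product(*value_lists)]


candidates = expand_grid(
    {"window_length": [7, 12, 15], "estimator__n_neighbors": list(range(1, 10))}
)
print(len(candidates))  # 27
print(candidates[0])    # {'estimator__n_neighbors': 1, 'window_length': 7}
```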

[113]:

from sklearn.neighbors import KNeighborsRegressor

from aeon.forecasting.compose import make_reduction

from aeon.forecasting.model_selection import (

ForecastingGridSearchCV,

SlidingWindowSplitter,

)

[114]:

param_grid = {"window_length": [7, 12, 15], "estimator__n_neighbors": np.arange(1, 10)}

regressor = KNeighborsRegressor()

forecaster = make_reduction(regressor, strategy="recursive")

cv = SlidingWindowSplitter(initial_window=int(len(y_train) * 0.8), window_length=30)

gscv = ForecastingGridSearchCV(forecaster, cv=cv, param_grid=param_grid)
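As a rough guide to the cost of this search, the grid defined in this cell can be expanded by hand. The sketch below uses only the standard library, with `range` standing in for `np.arange`; it shows the idea of an exhaustive grid and is not the expansion code used inside `ForecastingGridSearchCV`.

```python
from itertools import product

# Same grid as in the cell above, with range() standing in for np.arange.
param_grid = {
    "window_length": [7, 12, 15],
    "estimator__n_neighbors": list(range(1, 10)),
}

# Every combination of one value per key is one candidate configuration.
names = sorted(param_grid)
candidates = [
    dict(zip(names, values))
    for values in product(*(param_grid[name] for name in names))
]

print(len(candidates))  # 3 window lengths x 9 neighbour counts = 27
print(candidates[0])
```

Each of the 27 candidates is fitted and scored on every split produced by the splitter, so the total number of fits grows multiplicatively with both the grid size and the number of splits.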

[115]:

gscv.fit(y_train)

y_pred = gscv.predict(fh)

plot_series(y_train, y_test, y_pred, labels=["y_train", "y_test", "y_pred"])

mean_absolute_percentage_error(y_test, y_pred, symmetric=False)

[115]:

0.13988948769413537

[116]:

gscv.best_params_

[116]:

{'estimator__n_neighbors': 2, 'window_length': 12}

An alternative to the above is tuning the regressor separately, using scikit-learn’s GridSearchCV and a separate parameter grid. As this does not use the “overall” performance metric to tune the inner regressor, performance of the composite forecaster may vary.

[117]:

from sklearn.model_selection import GridSearchCV

# tuning the 'n_neighbors' hyperparameter of KNeighborsRegressor from scikit-learn

regressor_param_grid = {"n_neighbors": np.arange(1, 10)}

forecaster_param_grid = {"window_length": [7, 12, 15]}

# create a tunable regressor with GridSearchCV

regressor = GridSearchCV(KNeighborsRegressor(), param_grid=regressor_param_grid)

forecaster = make_reduction(regressor, strategy="recursive")

cv = SlidingWindowSplitter(initial_window=int(len(y_train) * 0.8), window_length=30)

gscv = ForecastingGridSearchCV(forecaster, cv=cv, param_grid=forecaster_param_grid)

[118]:

gscv.fit(y_train)

y_pred = gscv.predict(fh)

plot_series(y_train, y_test, y_pred, labels=["y_train", "y_test", "y_pred"])

mean_absolute_percentage_error(y_test, y_pred, symmetric=False)

[118]:

0.14493362646957736

NOTE: a smart implementation of this would use caching to save partial results from the inner tuning and reduce runtime substantially - currently aeon does not support this. Consider helping to improve aeon.
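The general idea behind such caching can be sketched with plain memoization. Everything in the block below is hypothetical: `tune_inner_regressor` is a made-up stand-in for an expensive inner tuning step, and the assumption that results could be keyed by window length and fold is ours, not something aeon provides.

```python
from functools import lru_cache

inner_tuning_calls = 0


@lru_cache(maxsize=None)
def tune_inner_regressor(window_length, fold):
    """Hypothetical stand-in for an expensive inner tuning run on one fold."""
    global inner_tuning_calls
    inner_tuning_calls += 1
    # Placeholder result standing in for the selected n_neighbors.
    return (window_length + fold) % 9 + 1


# Two passes over the same 3 x 4 (window_length, fold) configurations.
for _ in range(2):
    for window_length in (7, 12, 15):
        for fold in range(4):
            tune_inner_regressor(window_length, fold)

print(inner_tuning_calls)  # 12 rather than 24: the second pass hits the cache
```

Whether and where such repeated work actually occurs depends on the tuner's internals, so this only illustrates the principle of reusing partial results.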

3.3.3 Selecting the metric and retrieving scores¶

All tuning algorithms in aeon allow the user to set a score; for forecasting the default is mean absolute percentage error. The score can be set using the scoring argument, to any scorer function or class, as in Section 1.3.
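As a reminder of what the two metrics used in this section compute, here is a small pure-Python sketch. The functions `toy_mape` and `toy_mse` are illustrative re-implementations of the textbook formulas, not the aeon metric functions, and they skip the input validation and options that the library versions provide.

```python
def toy_mape(y_true, y_pred):
    """Non-symmetric mean absolute percentage error: mean of |y - y_hat| / |y|."""
    return sum(abs(t - p) / abs(t) for t, p in zip(y_true, y_pred)) / len(y_true)


def toy_mse(y_true, y_pred):
    """Mean squared error: mean of (y - y_hat)^2."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


y_true = [100.0, 200.0]
y_pred = [110.0, 180.0]

print(toy_mape(y_true, y_pred))  # both errors are 10% of the true value
print(toy_mse(y_true, y_pred))   # (10^2 + 20^2) / 2 = 250.0
```

The percentage error is scale-free, whereas the squared error is in squared units of the series and penalizes large deviations more heavily, so the two can rank candidate configurations differently.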

Re-sampling tuners retain performances on individual forecast re-sample folds, which can be retrieved from the cv_results_ attribute after the forecaster has been fitted via a call to fit.

In the above example, using the mean squared error instead of the mean absolute percentage error for tuning would be done by defining the forecaster as follows:

[119]:

from aeon.performance_metrics.forecasting import mean_squared_error

[120]:

mse = mean_squared_error

param_grid = {"window_length": [7, 12, 15]}

regressor = KNeighborsRegressor()

cv = SlidingWindowSplitter(initial_window=int(len(y_train) * 0.8), window_length=30)

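# note: `forecaster` is reused from the previous cell; the `regressor` defined above is not wired into it here

gscv = ForecastingGridSearchCV(forecaster, cv=cv, param_grid=param_grid, scoring=mse)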

The performances on individual folds can be accessed as follows, after fitting:

[121]:

gscv.fit(y_train)

gscv.cv_results_

[121]:

| | mean_test_mean_squared_error | mean_fit_time | mean_pred_time | params | rank_test_mean_squared_error |
|---|---|---|---|---|---|
| 0 | 2600.750255 | 0.072074 | 0.002209 | {'window_length': 7} | 3.0 |
| 1 | 1134.999053 | 0.097644 | 0.002344 | {'window_length': 12} | 1.0 |
| 2 | 1285.133614 | 0.117036 | 0.003169 | {'window_length': 15} | 2.0 |
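The rank column follows directly from the mean test scores. The short sketch below copies the scores from the table above into plain dictionaries and picks the best configuration by hand; it assumes, as is the case for mean squared error, that lower is better.

```python
# Mean test scores copied from the table above (lower is better for MSE).
results = [
    {"params": {"window_length": 7}, "mean_test_mean_squared_error": 2600.750255},
    {"params": {"window_length": 12}, "mean_test_mean_squared_error": 1134.999053},
    {"params": {"window_length": 15}, "mean_test_mean_squared_error": 1285.133614},
]

# Sort ascending by score; the first row is the rank-1 configuration.
ranked = sorted(results, key=lambda row: row["mean_test_mean_squared_error"])
best_params = ranked[0]["params"]

print(best_params)  # {'window_length': 12}
```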

3.4 autoML aka automated model selection, ensembling and hedging¶

aeon provides a number of compositors for ensembling and automated model selection. In contrast to tuning, which uses data-driven strategies to find optimal hyper-parameters for a fixed forecaster, the strategies in this section combine or select on the level of estimators, using a collection of forecasters to combine or select from.

The strategies discussed in this section are:

* autoML aka automated model selection
* simple ensembling
* prediction weighted ensembles with weight updates, and hedging strategies

3.4.1 autoML aka automatic model selection, using tuning plus multiplexer¶

The most flexible way to perform model selection over forecasters is by using the MultiplexForecaster, which exposes the choice of a forecaster from a list as a hyper-parameter that is tunable by generic hyper-parameter tuning strategies such as in Section 3.3.

In isolation, MultiplexForecaster is constructed with forecasters, a list of (name, forecaster) pairs. It has a single hyper-parameter, selected_forecaster, which can be set to the name of any forecaster in forecasters; the multiplexer then behaves exactly like the forecaster registered under that name.
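The delegation pattern can be sketched in a few lines of plain Python. The classes `LastValue`, `MeanValue` and `ToyMultiplexer` below are hypothetical toys written for this illustration, with a simplified `fit`/`predict` signature; they are not the aeon implementation, and the fallback to the first entry when nothing is selected is an assumption of this sketch.

```python
class LastValue:
    """Toy forecaster: repeat the last observed value."""

    def fit(self, y):
        self.last_ = y[-1]
        return self

    def predict(self, n_steps):
        return [self.last_] * n_steps


class MeanValue:
    """Toy forecaster: repeat the mean of the observed values."""

    def fit(self, y):
        self.mean_ = sum(y) / len(y)
        return self

    def predict(self, n_steps):
        return [self.mean_] * n_steps


class ToyMultiplexer:
    """Delegate fit/predict to whichever named forecaster is selected."""

    def __init__(self, forecasters, selected_forecaster=None):
        self.forecasters = forecasters
        self.selected_forecaster = selected_forecaster

    def _selected(self):
        # Sketch assumption: fall back to the first entry if none is selected.
        name = self.selected_forecaster or self.forecasters[0][0]
        return dict(self.forecasters)[name]

    def fit(self, y):
        self._selected().fit(y)
        return self

    def predict(self, n_steps):
        return self._selected().predict(n_steps)


y = [1.0, 2.0, 6.0]
mux = ToyMultiplexer([("last", LastValue()), ("mean", MeanValue())], "last")
pred_last = mux.fit(y).predict(2)

mux.selected_forecaster = "mean"
pred_mean = mux.fit(y).predict(2)

print(pred_last, pred_mean)  # [6.0, 6.0] [3.0, 3.0]
```

Because the choice of forecaster is an ordinary parameter, any generic tuner that can set parameters can also select between models.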

[122]:

from aeon.forecasting.compose import MultiplexForecaster

from aeon.forecasting.exp_smoothing import ExponentialSmoothing

from aeon.forecasting.naive import NaiveForecaster

[123]:

forecaster = MultiplexForecaster(

forecasters=[

("naive", NaiveForecaster(strategy="last")),

("ets", ExponentialSmoothing(trend="add", sp=12)),

],

)

[124]:

forecaster.set_params(**{"selected_forecaster": "naive"})

# now forecaster behaves like NaiveForecaster(strategy="last")

[124]:

MultiplexForecaster(forecasters=[('naive', NaiveForecaster()),

('ets',

ExponentialSmoothing(sp=12, trend='add'))],

selected_forecaster='naive')

[125]:

forecaster.set_params(**{"selected_forecaster": "ets"})

# now forecaster behaves like ExponentialSmoothing(trend="add", sp=12)

[125]:

MultiplexForecaster(forecasters=[('naive', NaiveForecaster()),

('ets',

ExponentialSmoothing(sp=12, trend='add'))],

selected_forecaster='ets')

The MultiplexForecaster is not too useful in isolation, but allows for flexible autoML when combined with a tuning wrapper. The code below defines a forecaster that selects one of NaiveForecaster and ExponentialSmoothing by sliding window tuning as in Section 3.3.

Combined with rolling use of the forecaster via the update functionality (see Section 1.4), the tuned multiplexer can switch back and forth between NaiveForecaster and ExponentialSmoothing, depending on performance, as time progresses.
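The switching behaviour can be illustrated with a simplified, self-contained sketch. It re-selects between two toy forecasters at every time step, based on their one-step-ahead errors over the most recent points. The candidate functions, the `rolling_selection` helper and the data are made up for this illustration; the sketch does not use aeon's update mechanism or its splitters.

```python
def last_value(history):
    return history[-1]


def mean_value(history):
    return sum(history) / len(history)


CANDIDATES = {"naive_last": last_value, "naive_mean": mean_value}


def recent_error(forecast, y, start, end):
    """Mean absolute one-step-ahead error for targets y[start], ..., y[end - 1]."""
    errors = [abs(y[t] - forecast(y[:t])) for t in range(start, end)]
    return sum(errors) / len(errors)


def rolling_selection(y, window):
    """After each new observation, pick the candidate with the lowest recent error."""
    chosen = []
    for t in range(window + 1, len(y) + 1):
        scores = {
            name: recent_error(forecast, y, t - window, t)
            for name, forecast in CANDIDATES.items()
        }
        chosen.append(min(scores, key=scores.get))
    return chosen


# A trending stretch followed by an oscillation around a fixed level.
y = [1, 2, 3, 4, 5, 6, 3, 6, 3, 6, 3, 6]
chosen = rolling_selection(y, window=3)
print(chosen)
```

On this series the last-value forecaster is selected while the trend lasts, and the mean forecaster takes over once the series starts oscillating, which is the kind of switch a tuned multiplexer can make as new data arrives.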

[126]:

from aeon.forecasting.model_selection import (

ForecastingGridSearchCV,

SlidingWindowSplitter,

)

[127]:

forecaster = MultiplexForecaster(

forecasters=[

("naive", NaiveForecaster(strategy="last")),

("ets", ExponentialSmoothing(trend="add", sp=12)),

]

)

cv = SlidingWindowSplitter(initial_window=int(len(y_train) * 0.5), window_length=30)

forecaster_param_grid = {"selected_forecaster": ["ets", "naive"]}

gscv = ForecastingGridSearchCV(forecaster, cv=cv, param_grid=forecaster_param_grid)

[128]:

gscv.fit(y_train)

y_pred = gscv.predict(fh)

plot_series(y_train, y_test, y_pred, labels=["y_train", "y_test", "y_pred"])

mean_absolute_percentage_error(y_test, y_pred, symmetric=False)

[128]:

0.19886711926999853

As with any tuned forecaster, best parameters and an instance of the tuned forecaster can be retrieved using best_params_ and best_forecaster_:

[129]:

gscv.best_params_

[129]:

{'selected_forecaster': 'naive'}

[130]:

gscv.best_forecaster_

[130]:

MultiplexForecaster(forecasters=[('naive', NaiveForecaster()),

('ets',

ExponentialSmoothing(sp=12, trend='add'))],

selected_forecaster='naive')

3.4.2 autoML: selecting transformer combinations via OptionalPassthrough¶

aeon also provides capabilities for automated selection of pipeline components inside a pipeline, i.e., pipeline structure. This is achieved with the OptionalPassthrough transformer.

The OptionalPassthrough transformer makes it possible to tune whether a transformer inside a pipeline is applied to the data or not. For example, to tune whether sklearn.preprocessing.StandardScaler improves the forecast, we wrap it in OptionalPassthrough. Internally, OptionalPassthrough has a tunable hyperparameter passthrough: bool; when False, the composite behaves like the wrapped transformer, and when True, it ignores the wrapped transformer.
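The on/off behaviour can be sketched with toy classes. `ToyStandardScaler` and `ToyOptionalPassthrough` are hypothetical, with a simplified `fit_transform` that works on plain lists; they illustrate the wrapping pattern only and are not the aeon or scikit-learn classes.

```python
class ToyStandardScaler:
    """Toy scaler: subtract the mean and divide by the population std."""

    def fit_transform(self, y):
        mean = sum(y) / len(y)
        std = (sum((v - mean) ** 2 for v in y) / len(y)) ** 0.5
        return [(v - mean) / std for v in y]


class ToyOptionalPassthrough:
    """Apply the wrapped transformer unless passthrough is True."""

    def __init__(self, transformer, passthrough=False):
        self.transformer = transformer
        self.passthrough = passthrough

    def fit_transform(self, y):
        if self.passthrough:
            # Skip the wrapped transformer and return the data unchanged.
            return list(y)
        return self.transformer.fit_transform(y)


y = [1.0, 2.0, 3.0]
scaled = ToyOptionalPassthrough(ToyStandardScaler(), passthrough=False).fit_transform(y)
skipped = ToyOptionalPassthrough(ToyStandardScaler(), passthrough=True).fit_transform(y)

print(scaled)
print(skipped)
```

Since `passthrough` is an ordinary boolean parameter, including `[True, False]` for it in a parameter grid turns the presence of the step into something a tuner can decide.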

To make effective use of OptionalPassthrough, define a suitable parameter set using the __ (double underscore) notation familiar from scikit-learn. This notation gives access to, and allows tuning of, attributes of nested objects such as TabularToSeriesAdaptor(StandardScaler()). The __ separator can be chained when there are more than two levels of nesting.

In the following example, we take a deseasonalize/scale pipeline and tune over the four possible combinations of deseasonalizer and scaler being included in the pipeline yes/no (2 times 2 = 4); as well as over the forecaster’s and the scaler’s parameters.

Note: this could be arbitrarily combined with MultiplexForecaster, as in Section 3.4.1, to select over pipeline architecture as well as over pipeline structure.

Note: scikit-learn and aeon do not currently support conditional parameter sets (unlike, e.g., the mlr3 package). This means that the grid search will still iterate over the scaler’s parameters even when the scaler is skipped. Designing/implementing this capability would be an interesting area for contributions or research.
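The cost of missing conditional parameter sets can be made concrete by counting candidates. The grid keys in the sketch below are hypothetical and chosen only for illustration; the point is that some grid points differ only in a parameter of a step that is skipped anyway.

```python
from itertools import product

# Hypothetical grid keys, for illustration only.
param_grid = {
    "scaler__passthrough": [True, False],
    "scaler__with_mean": [True, False],
    "forecaster__strategy": ["last", "mean"],
}

names = list(param_grid)
candidates = [dict(zip(names, values)) for values in product(*param_grid.values())]


def effective_config(candidate):
    """Drop the scaler's own parameter when the scaler is skipped."""
    config = dict(candidate)
    if config["scaler__passthrough"]:
        config.pop("scaler__with_mean")
    return tuple(sorted(config.items()))


distinct = {effective_config(candidate) for candidate in candidates}

print(len(candidates))  # 8 grid points are evaluated
print(len(distinct))    # only 6 of them lead to different pipelines
```

A plain grid search evaluates all eight candidates, so two of the fits in this toy grid are redundant; the overhead grows with the number of parameters attached to skippable steps.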

[131]:

from sklearn.preprocessing import StandardScaler

from aeon.forecasting.compose import TransformedTargetForecaster

from aeon.forecasting.model_selection import (

ForecastingGridSearchCV,

SlidingWindowSplitter,

)

from aeon.forecasting.naive import NaiveForecaster

from aeon.transformations.adapt import TabularToSeriesAdaptor